This week, I’m featuring some of the best blog posts written by the students on my first year undergraduate course, PMU199 Climate Change: Software, Science and Society. This post is by Harry, and it first appeared on the course blog on January 29.

Projections from global climate models indicate that continued 21st century increases in emissions of greenhouse gases will cause the temperature of the globe to increase by a few degrees. A global change of even a few degrees could have a huge impact on our planet. Whereas a few degrees of global cooling could lead to another ice age, a few degrees of global warming would let the world witness more of one of nature’s most terrifying phenomena: lightning.

According to Anthony D. Del Genio, the surface of the earth heats up from sunlight and other thermal radiation, and the energy it accumulates must be offset to maintain a stable temperature. Our planet does this by evaporating water, which rises with buoyant warm air and condenses. This moves excess heat from the surface to higher altitudes. In powerful updrafts, the water droplets are easily carried upwards, where they supercool to temperatures between -10 and -40°C. The collision of water droplets with soft ice crystals forms a dense mixture of ice pellets called graupel. The densities of graupel and ice crystals and the electrical charges they induce are two essential factors in producing what people see as lightning.

Differences between updrafts over ocean and over land also explain why lightning is more frequent over land. Over the course of the day, the oceans absorb heat but hardly warm up. Land surfaces, on the other hand, cannot store heat, and so they warm significantly from the beginning of the day. Much of the air above land surfaces is therefore warmer and more buoyant than the air over the oceans, creating strong convective storms as the warm air rises. The powerful updrafts in these convective storms are more prone to generate lightning.

In two experiments with the general circulation model from the Goddard Institute for Space Studies, the first indicated that a global warming of 4.2°C would increase global lightning activity by 30%. The second indicated that a global cooling of 5.9°C would decrease global lightning frequency by 24%. Taken together, the experiments suggest a 5-6% change in global lightning frequency for every 1°C of global warming or cooling.
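To see where a figure of 5-6% per degree could come from, here is a rough sketch in Python that divides each experiment's change in lightning by its change in temperature and averages the two rates. The simple linear average is my assumption about how the summary figure relates to the two experiments, not necessarily how the modellers derived it, and the variable and function names are made up for illustration.

```python
# Figures quoted in the text for the two GISS model experiments.
# Negative values represent cooling and a decrease in lightning.
experiments = {
    "warming": {"delta_temp_c": 4.2, "lightning_change_pct": 30.0},
    "cooling": {"delta_temp_c": -5.9, "lightning_change_pct": -24.0},
}


def sensitivity_pct_per_degree(delta_temp_c, lightning_change_pct):
    """Percent change in lightning per 1 degree C, assuming a linear response."""
    return lightning_change_pct / delta_temp_c


# Per-experiment rates, then a plain average of the two (an assumption).
rates = {
    name: sensitivity_pct_per_degree(**values)
    for name, values in experiments.items()
}
mean_rate = sum(rates.values()) / len(rates)

for name, rate in rates.items():
    print(f"{name}: {rate:.1f}% per degree C")
print(f"average: {mean_rate:.1f}% per degree C")
```

The warming experiment works out to about 7.1% per degree and the cooling experiment to about 4.1% per degree, so the average of roughly 5.6% falls in the quoted 5-6% range.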

If 21st century projections of carbon dioxide and other greenhouse gas emissions hold true, the earth will continue to warm and the oceans will evaporate more water. The drier land surface cannot evaporate water to the same extent as the oceans, so the land warms more. This should cause stronger convective storms and more frequent lightning.

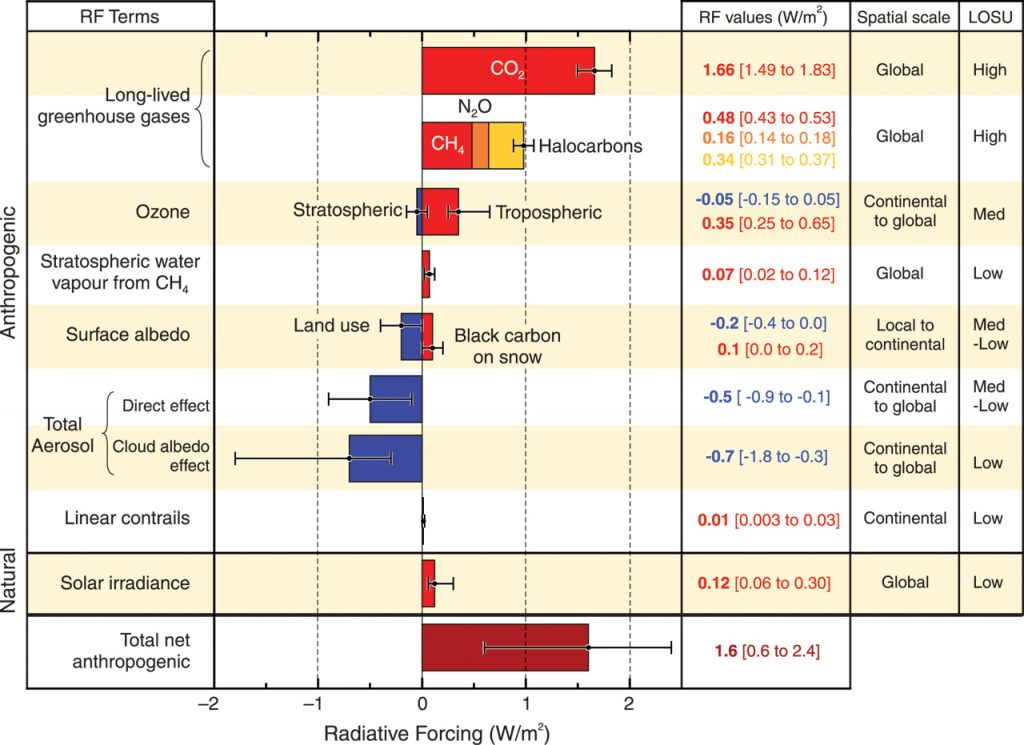

Greater lightning frequencies can contribute to a warmer earth. Lightning provides an abundant source of nitrogen oxides, which are precursors for ozone production in the troposphere. Ozone in the upper troposphere acts as a greenhouse gas that absorbs some of the infrared energy emitted by earth. Because tropospheric ozone traps some of the escaping heat, the earth warms and lightning occurs even more often. In this way, more frequent lightning creates a positive feedback in our climate system. The impact of ozone on the climate is much stronger than that of carbon dioxide, especially on a per-molecule basis, since ozone has a radiative forcing effect that is approximately 1,000 times as powerful as carbon dioxide. Luckily, ozone in the troposphere is not as prevalent on a global scale as carbon dioxide, and its atmospheric lifetime averages about 22 days.

"Climate simulations, which were generated from four Global General Circulation Models (GCM), were used to project forest fire danger levels with relation to global warming."

Although lightning may become more frequent around the world, each strike affects only a very local area. This local effect is what has the most impact on people. In a thunderstorm, more frequent lightning puts areas with a high concentration of trees at high risk of forest fire. In Canada, such areas include the west-central and northwestern woodlands, which are major targets for ignition by lightning. In fact, lightning accounted for 85% of the total area burned from 1959 to 1999. To preserve habitats for animals, and to preserve forests for their function as a carbon sink, the government must be pressed to minimize forest fires in these regions. With 21st century estimates of increased temperature, the area burned by lightning-ignited fires could increase dramatically. This is because rising temperatures dry out surfaces, producing more “fuel” for the fires.

Although lightning has negative effects on our climate system and on people, it also has positive effects on earth and for life. The ozone layer, located in the upper atmosphere, blocks much of the ultraviolet light that would otherwise reach earth’s surface. Lightning also drives a natural process known as nitrogen fixation. This process is fundamental to life because fixed nitrogen is required to construct the basic building blocks of life (e.g. nucleotides for DNA and amino acids for proteins).

Lightning is an amazing and natural occurrence in our skies. Whether it’s a sight to behold or to fear, we’ll see more of it as our earth becomes warmer.