I’ve just uploaded my Keystone XL pipeline letter to the Million Letter March site. If you haven’t already done so, write your own letter and submit it too (the site has some good tips for making the letters personal).

Here’s an announcement for a Workshop on Climate Knowledge Discovery, to be held at the Supercomputing 2011 Conference in Seattle on 13 November 2011.

Numerical simulation based science follows a new paradigm: its knowledge discovery process rests upon massive amounts of data. We are entering the age of data intensive science. Climate scientists generate data faster than it can be interpreted and need to prepare for further exponential data increases. Current analysis approaches are primarily focused on traditional methods, best suited for large-scale phenomena and coarse-resolution data sets. Tools that employ a combination of high-performance analytics, with algorithms motivated by network science, nonlinear dynamics and statistics, as well as data mining and machine learning, could provide unique insights into challenging features of the Earth system, including extreme events and chaotic regimes. The breakthroughs needed to address these challenges will come from collaborative efforts involving several disciplines, including end-user scientists, computer and computational scientists, computing engineers, and mathematicians.

The SC11 CKD workshop will bring together experts from various domains to investigate the use and application of large-scale graph analytics, semantic technologies and knowledge discovery algorithms in climate science. The workshop is the second in a series of planned workshops to discuss the design and development of methods and tools for knowledge discovery in climate science.

Proposed agenda topics include:

- Science Vision for Advanced Climate Data Analytics

- Application of massive scale data analytics to large-scale distributed interdisciplinary environmental data repositories

- Application of networks and graphs to spatio-temporal climate data, including computational implications

- Application of semantic technologies in climate data information models, including RDF and OWL

- Enabling technologies for massive-scale data analytics, including graph construction, graph algorithms, graph oriented computing and user interfaces.

The first Climate Knowledge Discovery (CKD) workshop was hosted by the German Climate Computing Center (DKRZ) in Hamburg, Germany from 30 March to 1 April 2011. This workshop brought together climate and computer scientists from major US and European laboratories, data centers and universities, as well as representatives from the industry, the broader academic, and the Semantic Web communities. Papers and presentations are available online.

We hope that you will be able to participate and look forward to seeing you in Seattle. For further information or questions, please do not hesitate to contact any of the co-organizers:

- Reinhard Budich – MPI für Meteorologie (MPI-M)

- John Feo – Pacific Northwest National Laboratory (PNNL)

- Per Nyberg – Cray Inc.

- Tobias Weigel – Deutsches Klimarechenzentrum GmbH (DKRZ)

Today there was a mass demonstration in Ottawa against the Keystone XL pipeline. The protest in Canada is largely symbolic, as our prime minister has already given the project the go-ahead. But in the US, a similar protest in Washington really does matter, because the decision on whether to allow the project to go ahead will rest squarely with the president.

Others have eloquently explained the issue. I would recommend ClimateSight for a recent overview & background on the protests, and David Pritchard for a sober analysis of the emissions impact.

As I couldn’t make it to Ottawa to make my voice heard, I’ve drafted a letter to Obama. Feel free to suggest improvements; I plan to send it later this week.

—

President Obama,

When you were elected as president you spoke eloquently about climate change: “Now is the time to confront this challenge once and for all. Delay is no longer an option. Denial is no longer an acceptable response.” And you repeated your campaign promise to work towards an 80% cut in emissions by 2050. These were fine words, but what about the tough decisions needed to deliver on such a promise?

Sometime in the next few months, you will have to make a decision on whether to allow the Keystone XL pipeline project to go ahead. The pipeline represents a key piece of the infrastructure that will bring onstream one of the largest oil deposits in the world, and a source of liquid fuel that’s somewhere between 15 and 40% worse in terms of carbon emissions per gallon than conventional oil (Pritchard, 2009). The question over whether we will dig up the tar sands and burn them for fuel represents a crucial decision point for the people of the entire planet.

If the pipeline goes ahead, many jobs will be created, and certain companies will make huge profits. On the other hand, if the pipeline does not go ahead, and we decide that instead of investing in extracting the oil sands, we invest instead in clean energy, other companies will make the profits, and other jobs will be created elsewhere. So, despite what many advocates for the pipeline say, this isn’t about jobs and the economy. It’s about what type of energy we choose to use for the next few decades, and which companies get to profit from it.

I’m a professor in Computer Science. My research focusses on climate models and how they are developed, tested, and used. While my background is in software and systems engineering, I specialize in the study of complex systems, and how they can be understood and safely controlled. In my studies of climate models, I’ve been impressed with the diligence and quality of the science that has gone into them, and the care that climate scientists take in checking and rechecking their results, and ensuring they’re not over-interpreted.

I’ve seen many talks by these scientists in which they reluctantly conclude that we’ve transgressed a number of planetary boundaries (Rockstrom 2010). Nobody wants this to be true, but it is. Yet these scientists are continually attacked by people who can’t (or don’t want to) accept this truth. The world is deeply in denial about this, and we’re in desperate need of strong, informed leadership to push us onto a different path. We need an intervention.

The easy part has been done. The science has been assessed and summarized by the IPCC and the national academies. The world’s governments have peered into the future and collectively agreed that a global temperature rise of more than 2°C would be disastrous (Randalls, 2010). It doesn’t sound like much, but it’s enough to take us to a global climate that’s hotter than at any time since humans appeared on the planet.

Now the tough decisions have to be made for how to ensure we don’t exceed such a limit. One of the hardest dilemmas we face is that a significant fraction of the world’s fossil fuel reserves must remain in the ground if we are to stay within the 2°C target. Some recent results from modelling studies allow us to estimate how much of the world’s remaining fossil fuel reserves must remain unexploited (Allen et al, 2009).

Cumulatively, since the dawn of the industrial era, humanity has burnt enough fossil fuels to release about 0.5 trillion tonnes of carbon. Some of this stays in the atmosphere and upsets the earth’s energy balance. Some of it is absorbed by the oceans, making them more acidic. The effect on the climate has been to raise global temperatures by about 0.7°C, with about half as much again owed to us, because the temperature response lags the atmospheric change by many years, and because some of the effect has been masked by other forms of short-lived pollution.

If we want to contain rising global temperatures to stay within the target of no more than 2°C of warming, then humanity cannot burn more than about another 0.5 trillion tonnes. Ever. This isn’t something we can negotiate with the planet on. It isn’t something we can trade off for a little extra economic growth. It is a physical limit. The number is approximate, so we might get away with a little more, but there’s enough uncertainty that we ought to be following good engineering practice and working with some significant safety margins.

Reasonable estimates of the world’s remaining fossil fuel reserves tell us that there is enough to release at least double this amount of carbon. So we have to find a way to leave around half these reserves untapped (Monbiot 2009; Easterbrook 2009). That’s a problem we’ve never solved before: how can we afford to leave such valuable commodities buried under the ground forever?

The only sensible answer is to use that fraction that we can burn as a transition fuel to effect a rapid switch to alternative energy sources. So it’s not so much a question of leaving resources alone, or failing to exploit opportunities for economic development; it’s more a question of choosing to invest differently. Instead of seeking to extract ever more sources of oil and coal, we should be investing in more efficient wind, solar, hydro and geothermal energy, and building the infrastructure to transition to these fuels.

Which brings us to the decision on the Keystone XL pipeline. This pipeline is significant, as it represents a vital piece of the infrastructure that will bring a major additional source of fossil fuels onstream, one that produces far more emissions per unit of energy than conventional oil, and that has the potential, if fully exploited, to double again the emissions from oil reserves. If we decide to build this infrastructure, it will be impossible to pull back – we’re committing the world to burning a whole new source of fossil fuels.

So the Keystone XL pipeline might be one of the most important decisions you ever get to make as president. It will define your legacy. Will this be the moment that you committed us to a path that will make exceeding the 2°C limit inevitable? Or will it be the moment when you drew a line in the sand and began the long struggle to wean us off our oil addiction?

That oil addiction is accidentally changing the planet’s life support system. The crucial question now is can we deliberately and collectively change it back again?

Yours faithfully,

Prof Steve Easterbrook.

Valdivino, who is working on a PhD in Brazil, on formal software verification techniques, is inspired by my suggestion to find ways to apply our current software research skills to climate science. But he asks some hard questions:

1.) If I want to Validate and Verify climate models should I forget all the things that I have learned so far in the V&V discipline? (e.g. Model-Based Testing (Finite State Machine, Statecharts, Z, B), structural testing, code inspection, static analysis, model checking)

2.) Among all V&V techniques, what can really be reused / adapted for climate models?

Well, I wish I had some good answers. When I started looking at the software development processes for climate models, I expected to be able to apply many of the formal techniques I’ve worked on in the past in Verification and Validation (V&V) and Requirements Engineering (RE). It turns out almost none of it seems to apply, at least in any obvious way.

Climate models are built through a long, slow process of trial and error, continually seeking to improve the quality of the simulations (See here for an overview of how they’re tested). As this is scientific research, it’s unknown, a priori, what will work, what’s computationally feasible, etc. Worse still, the complexity of the earth systems being studied means it’s often hard to know which processes in the model most need work, because the relationship between particular earth system processes and the overall behaviour of the climate system is exactly what the researchers are working to understand.

Which means that model development looks most like an agile software development process, where both the requirements and the set of techniques needed to implement them are unknown (and unknowable) up-front. So they build a little, and then explore how well it works. The closest they come to a formal specification is a set of hypotheses along the lines of:

“if I change <piece of code> in <routine>, I expect it to have <specific impact on model error> in <output variable> by <expected margin> because of <tentative theory about climatic processes and how they’re represented in the model>”

This hypothesis can then be tested by a formal experiment in which runs of the model with and without the altered code become two treatments, assessed against the observational data for some relevant period in the past. The expected improvement might be a reduction in the root mean squared error for some variable of interest, or just as importantly, an improvement in the variability (e.g. the seasonal or diurnal spread).
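
To make the two-treatment comparison concrete, here is a minimal sketch of how the error reduction might be scored. The function names and all the numbers are made up for illustration; they are not taken from any real model or observational dataset, and a real experiment would compare gridded fields over a long hindcast period rather than four values.

```python
import math


def rmse(simulated, observed):
    """Root mean squared error between a simulated and an observed series."""
    if len(simulated) != len(observed):
        raise ValueError("series must have the same length")
    squared = [(s - o) ** 2 for s, o in zip(simulated, observed)]
    return math.sqrt(sum(squared) / len(squared))


def compare_treatments(control, modified, observed):
    """Score both treatments against observations.

    Returns (control RMSE, modified RMSE, fractional reduction in RMSE).
    A positive reduction means the code change lowered the error.
    """
    control_err = rmse(control, observed)
    modified_err = rmse(modified, observed)
    reduction = (control_err - modified_err) / control_err
    return control_err, modified_err, reduction


# Hypothetical values, for illustration only.
observed = [14.0, 14.2, 14.1, 14.4]
control = [14.5, 14.7, 14.6, 14.9]   # run without the altered code
modified = [14.2, 14.4, 14.3, 14.6]  # run with the altered code

control_err, modified_err, reduction = compare_treatments(control, modified, observed)
print(f"control RMSE: {control_err:.2f}")
print(f"modified RMSE: {modified_err:.2f}")
print(f"reduction: {reduction:.0%}")
```

The hypothesis would then be judged by comparing the measured reduction with the expected margin stated up front. A similar comparison could be written for variability, by scoring the spread of each series rather than its error.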

The whole process looks a bit like this (although, see Jakob’s 2010 paper for a more sophisticated view of the process):

And of course, the central V&V technique here is full integration testing. The scientists build and run the full model to conduct the end-to-end tests that constitute the experiments.

So the closest thing they have to a specification would be a chart such as the following (courtesy of Tim Johns at the UK Met Office):

This chart shows how well the model is doing on 34 selected output variables (click the graph to see a bigger version, to get a sense of what the variables are). The scores for the previous model version have been normalized to 1.0, so you can quickly see whether the new model version did better or worse for each output variable – the previous model version is the line at “1.0” and the new model version is shown as the coloured dots above and below the line. The whiskers show the target skill level for each variable. If the coloured dot is within the whisker for a given variable, then the model is considered to be within the variability range for the observational data for that variable. Colour-coded dots then show how well the current version did: green means it’s within the target skill range, yellow means it’s outside the target range, but did better than the previous model version, and red means it’s outside the target and did worse than the previous model version.
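
The colour coding amounts to a small decision rule, which can be sketched as follows. This is only my reading of the chart, not the Met Office’s code: the function name `classify` is hypothetical, and I’m assuming the scores are error-like (lower is better) and that a tie with the previous version counts as red.

```python
def classify(new_score, target_low, target_high, previous_score=1.0):
    """Return the dot colour for one output variable.

    Assumptions (not confirmed by the chart's authors):
    - scores are normalized so the previous model version is 1.0
    - lower scores are better
    - [target_low, target_high] is the whisker, i.e. the target skill range
    """
    if target_low <= new_score <= target_high:
        return "green"   # within the target skill range
    if new_score < previous_score:
        return "yellow"  # outside the target, but better than before
    return "red"         # outside the target, and no better than before


# Hypothetical scores for three variables, for illustration only.
print(classify(0.90, 0.80, 1.00))
print(classify(0.95, 0.50, 0.90))
print(classify(1.10, 0.60, 1.05))
```

Writing it out this way also makes the point that the targets are thresholds on aggregate skill, which is a long way from a specification of what the code should do.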

Now, as we know, agile software practices aren’t really amenable to any kind of formal verification technique. If you don’t know what’s possible before you write the code, then you can’t write down a formal specification (the ‘target skill levels’ in the chart above don’t count – these are aspirational goals rather than specifications). And if you can’t write down a formal specification for the expected software behaviour, then you can’t apply formal reasoning techniques to determine if the specification was met.

So does this really mean, as Valdivino suggests, that we can’t apply any of our toolbox of formal verification methods? I think attempting to answer this would make a great research project. I have some ideas for places to look where such techniques might be applicable. For example:

- One important built-in check in a climate model is ‘conservation of mass’. Some fluxes move mass between the different components of the model. Water is an obvious one – it’s evaporated from the oceans, to become part of the atmosphere, and is then passed to the land component as rain, thence to the rivers module, and finally back to the ocean. All the while, the total mass of water across all components must not change. Similar checks apply to salt, carbon (actually this does change due to emissions), and various trace elements. At present, such checks are built in to the models as code assertions. In some cases, flux corrections were necessary because of imperfections in the numerical routines or the geometry of the grid, although the models have improved enough that most flux corrections have been removed. But I think you could automatically extract from the code an abstracted model capturing just the ways in which these quantities change, and then use a model checker to track down and reason about such problems.

- A more general version of the previous idea: In some sense, a climate model is a giant state-machine, but the scientists don’t ever build abstracted versions of it – they only work at the code level. If we build more abstracted models of the major state changes in each component of the model, and then do compositional verification over a combination of these models, it *might* offer useful insights into how the model works and how to improve it. At the very least, it would be an interesting teaching tool for people who want to learn about how a climate model works.

- Climate modellers generally don’t use unit testing. The challenge here is that they find it hard to write down correctness properties for individual code units. I’m not entirely clear how formal methods could help, but it seems like someone with experience of patterns for temporal logic properties might be able to contribute. Clune and Rood have a forthcoming paper on this in November’s IEEE Software. I suspect this is one of the easiest places to get started for software people new to climate models.

- There’s one other kind of verification test that is currently done by inspection, but might be amenable to some kind of formalization: the check that the code correctly implements a given mathematical formula. I don’t think this will be a high value tool, as the Fortran code is close enough to the mathematics that simple inspection is already very effective. But occasionally a subtle bug slips through – for example, I came across an example where the modellers discovered they had used the wrong logarithm (log_e in place of log_10), although this was more due to lack of clarity in the original published paper, rather than a coding error.
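
To give a flavour of the conservation check in the first idea above, here is a toy sketch of the water cycle as a runtime assertion. The component names follow the description in the text, but the masses, the helper functions and the tolerance are all hypothetical. Note that this is the assertion-style check the models already use, not the model-checking approach I’m proposing; an abstracted model would capture the same transfers symbolically.

```python
import math


def total_mass(components):
    """Total water mass summed over all model components."""
    return math.fsum(components.values())


def apply_flux(components, source, sink, amount):
    """Move `amount` of water from `source` to `sink`, returning a new state."""
    updated = dict(components)
    updated[source] -= amount
    updated[sink] += amount
    return updated


def assert_conserved(before, after, rel_tol=1e-12):
    """Raise if the total mass changed between two states."""
    if not math.isclose(total_mass(before), total_mass(after), rel_tol=rel_tol):
        raise AssertionError("water mass not conserved")


# Hypothetical masses in arbitrary units, for illustration only.
state = {"ocean": 1000.0, "atmosphere": 10.0, "land": 50.0, "rivers": 5.0}

# Evaporation, rain, runoff, and river discharge back to the ocean.
cycle = [
    ("ocean", "atmosphere", 2.0),
    ("atmosphere", "land", 2.0),
    ("land", "rivers", 2.0),
    ("rivers", "ocean", 2.0),
]

for source, sink, amount in cycle:
    new_state = apply_flux(state, source, sink, amount)
    assert_conserved(state, new_state)
    state = new_state

print(total_mass(state))
```

In a real model the transfers pass through numerical routines and regridding between components, which is where small imbalances can creep in, so the tolerance matters far more than it does in this toy.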

Feel free to suggest more ideas in the comments!

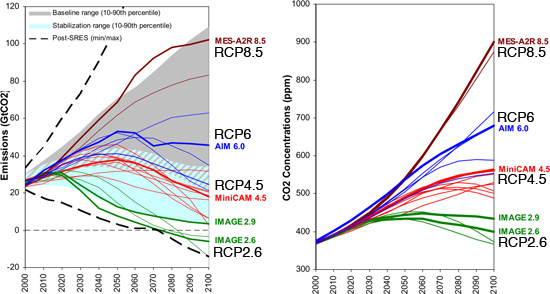

Over the next few years, you’re likely to see a lot of graphs like this (click for a bigger version):

This one is from a forthcoming paper by Meehl et al, and was shown by Jerry Meehl in his talk at the Annecy workshop this week. It shows the results for just a single model, CCSM4, so it shouldn’t be taken as representative yet. The IPCC assessment will use graphs taken from ensembles of many models, as model ensembles have been shown to be consistently more reliable than any single model (the models tend to compensate for each other’s idiosyncrasies).

But as a first glimpse of the results going into IPCC AR5, I find this graph fascinating:

- The extension of a higher emissions scenario out to three centuries shows much more dramatically how the choices we make in the next few decades can profoundly change the planet for centuries to come. For IPCC AR4, only the lower scenarios were run beyond 2100. Here, we see that a scenario that gives us 5 degrees of warming by the end of the century is likely to give us that much again (well over 9 degrees) over the next three centuries. In the past, people talked too much about temperature change at the end of this century, without considering that the warming is likely to continue well beyond that.

- The explicit inclusion of two mitigation scenarios (RCP2.6 and RCP4.5) gives good reason for optimism about what can be achieved through a concerted global strategy to reduce emissions. It is still possible to keep warming below 2 degrees. But, as I discuss below, the optimism is bounded by some hard truths about how much adaptation will still be necessary – even in this wildly optimistic case, the temperature drops only slowly over the three centuries, and still ends up warmer than today, even at the year 2300.

As the approach to these model runs has changed so much since AR4, a few words of explanation might be needed.

First, note that the zero point on the temperature scale is the global average temperature for 1986-2005. That’s different from the baseline used in the previous IPCC assessment, so you have to be careful with comparisons. I’d much prefer they used a pre-industrial baseline – to get that, you have to add 1 (roughly!) to the numbers on the y-axis on this graph. I’ll do that throughout this discussion.

I introduced the RCPs (“Representative Concentration Pathways”) a little in my previous post. Remember, these RCPs were carefully selected from the work of the integrated assessment modelling community, who analyze interactions between socio-economic conditions, climate policy, and energy use. They are representative in the sense that they were selected to span the range of plausible emissions paths discussed in the literature, both with and without a coordinated global emissions policy. They are pathways, as they specify in detail how emissions of greenhouse gases and other pollutants would change, year by year, under each set of assumptions. The pathway matters a lot, because it is cumulative emissions (and the relative amounts of different types of emissions) that determine how much warming we get, rather than the actual emissions level in any given year. (See this graph for details on the emissions and concentrations in each RCP).
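
A toy calculation shows why the pathway, rather than the end point, is what counts. The two series below are invented for illustration (arbitrary units, five time steps) and have nothing to do with the actual RCP data: both finish at the same annual emissions level, but one peaks early and the other late.

```python
def cumulative(annual_emissions):
    """Total emissions over the whole pathway."""
    return sum(annual_emissions)


# Hypothetical pathways in arbitrary units, for illustration only.
early_peak = [10, 12, 10, 8, 6]
late_peak = [10, 14, 16, 12, 6]

# Same emissions level in the final step...
print(early_peak[-1], late_peak[-1])

# ...but quite different cumulative totals.
print(cumulative(early_peak), cumulative(late_peak))
```

Looking only at the final value, the two pathways are indistinguishable; the difference only shows up in the totals, which is what the climate responds to.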

By the way, you can safely ignore the meaning of the numbers used to label the RCPs – they’re really just to remind the scientists which pathway is which. Briefly, the numbers represent the approximate anthropogenic forcing, in W/m², at the year 2100.

RCP8.5 and RCP6 represent two different pathways for a world with no explicit climate policy. RCP8.5 is at about the 90th percentile of the full set of non-mitigation scenarios described in the literature. So it’s not quite a worst-case scenario, but emissions much higher than this are unlikely. One scenario that follows this path is a world in which renewable power supply grows only slowly (to about 20% of the global power mix by 2070) while most of a growing demand for energy is still met from fossil fuels. Emissions continue to grow strongly, and don’t peak before the end of the century. Incidentally, RCP8.5 ends up in the year 2100 with a similar atmospheric concentration to the old A1FI scenario in AR4, at around 900ppm CO2.

RCP6 (which is only shown to the year 2100 in this graph) is in the lower quartile of likely non-mitigation scenarios. Here, emissions peak by mid-century and then stabilize at a little below double current annual emissions. This is possible without an explicit climate policy because under some socio-economic conditions, the world still shifts (slowly) towards cleaner energy sources, presumably because the price of renewables continues to fall while oil starts to run out.

The two mitigation pathways, RCP2.6 and RCP4.5, bracket a range of likely scenarios for a concerted global carbon emissions policy. RCP2.6 was explicitly picked as one of the most optimistic possible pathways – note that it’s outside the 90% confidence interval for mitigation scenarios. The expert group were cautious about selecting it, and spent extra time testing its assumptions before including it. But it was picked because there was interest in whether, in the most optimistic case, it’s possible to stay below 2°C of warming.

Most importantly, note that one of the assumptions in RCP2.6 is that the world goes carbon-negative by around 2070. Wait, what? Yes, that’s right – the pathway depends on our ability to find a way to remove more carbon from the atmosphere than we produce, and to be able to do this consistently on a global scale by 2070. So, the green line in the graph above is certainly possible, but it’s well outside the set of emissions targets currently under discussion in any international negotiations.

RCP4.5 represents a more mainstream view of global attempts to negotiate emissions reductions. On this pathway, emissions peak before mid-century, and fall to well below today’s levels by the end of the century. Of course, this is not enough to stabilize atmospheric concentrations until the end of the century.

The committee that selected the RCPs warns against over-interpretation. They deliberately selected an even number of pathways, to avoid any implication that a “middle” one is the most likely. Each pathway is the result of a different set of assumptions about how the world will develop over the coming century, either with, or without climate policies. Also:

- The RCPs should not be treated as forecasts, nor bounds on forecasts. No RCP represents a “best guess”. The high and low scenarios were picked as representative of the upper and lower ends of the range described in the literature.

- The RCPs should not be treated as policy prescriptions. They were picked to help answer scientific questions, not to offer specific policy choices.

- There isn’t a unique socio-economic scenario driving each RCP – there are multiple sets of conditions that might be consistent with a particular pathway. Identifying these sets of conditions in more detail is an open question to be studied over the next few years.

- There’s no consistent logic to the four RCPs, as each was derived from a different assessment model. So you can’t, for example, adjust individual assumptions to get from one RCP to another.

- The translation from emissions profiles (which the RCPs specify) into atmospheric concentrations and radiative forcings is uncertain, and hence is also an open research question. The intent is to study these uncertainties explicitly through the modeling process.

So, we have a set of emissions pathways chosen because they represent “interesting” points in the space of likely global socio-economic scenarios covered in the literature. These are the starting point for multiple lines of research by different research communities. The climate modeling community will use them as inputs to climate simulations, to explore temperature response, regional variations, precipitation, extreme weather, glaciers, sea ice, and so on. The impacts and adaptation community will use them to explore the different effects on human life and infrastructure, and how much adaptation will be needed under each scenario. The mitigation community will use them to study the impacts of possible policy choices, and will continue to investigate the socio-economic assumptions underlying these pathways, to give us a clearer account of how each might come about, and to produce an updated set of scenarios for future assessments.

Okay, back to the graph. This represents one of the first available sets of temperature outputs from a Global Climate Model for the four RCPs. Over the next two years, other modeling groups will produce data from their own runs of these RCPs, to give us a more robust set of multi-model ensemble runs.

So the results in this graph are very preliminary, but if the results from other groups are consistent with them, here’s what I think it means. The upper path, RCP8.5, offers a glimpse of what happens if economic development and fossil fuel use continue to grow the way they have over the last few decades. It’s hard to imagine much of the human race surviving the next few centuries under this scenario. The lowest path, RCP2.6, keeps us below the symbolically important threshold of 2 degrees of warming, but then doesn’t bring us down much from that throughout the coming centuries. And that’s a pretty stark result: even if we do find a way to go carbon-negative by the latter part of this century, the following two centuries still end up hotter than it is now. All the while that we’re re-inventing the entire world’s industrial basis to make it carbon-negative, we also have to be adapting to a global climate that is warmer than any experienced since the human species evolved.

[By the way: the 2 degree threshold is probably more symbolic than it is scientific, although there’s some evidence that this is the point above which many scientists believe positive feedbacks would start to kick in. For a history of the 2 degree limit, see Randalls 2010].

I’m on my way back from a workshop on Computing in the Atmospheric Sciences, in Annecy, France. The opening keynote, by Gerald Meehl of NCAR, gave us a fascinating overview of the CMIP5 model experiments that will form a key part of the upcoming IPCC Fifth Assessment Report. I’ve been meaning to write about the CMIP5 experiments for ages, as the modelling groups were all busy getting their runs started when I visited them last year. As Jerry’s overview was excellent, this gives me the impetus to write up a blog post. The rest of this post is a summary of Jerry’s talk.

Jerry described CMIP5 as “the most ambitious and computer-intensive inter-comparison project ever attempted”, and having seen many of the model labs working hard to get the model runs started last summer, I think that’s an apt description. More than 20 modelling groups around the world are expected to participate, supplying a total estimated dataset of more than 2 petabytes.

It’s interesting to compare CMIP5 to CMIP3, the model intercomparison project for the last IPCC assessment. CMIP3 began in 2003, and was, at that time, itself an unprecedented set of coordinated climate modelling experiments. It involved 16 groups, from 11 countries with 23 models (some groups contributed more than one model). The resulting CMIP3 dataset, hosted at PCMDI, is 31 terabytes, is openly accessible, has been accessed by more than 1200 scientists, has generated hundreds of papers, and use of this data is still ongoing. The ‘iconic’ figures for future projections of climate change in IPCC AR4 are derived from this dataset (see for example, Figure 10.4 which I’ve previously critiqued).

Most of the CMIP3 work was based on the IPCC SRES “what if” scenarios, which offer different views on future economic development and fossil fuel emissions, but none of which include a serious climate mitigation policy.

By 2006, during the planning of the next IPCC assessment, it was already clear that a profound paradigm shift was in progress. The idea of climate services had emerged, with a growing demand from industry, government and other groups for detailed regional information about the impacts of climate change, and, of course, a growing need to explicitly consider mitigation and adaptation scenarios. And of course the questions are connected: With different mitigation choices, what are the remaining regional climate effects that adaptation will have to deal with?

So, CMIP5 represents a new paradigm for climate change prediction:

- Decadal prediction, with high resolution Atmosphere-Ocean General Circulation Models (AOGCMs), with say, 50km grids, initialized to explore near-term climate change over the next three decades.

- First generation Earth System Models, which include a coupled carbon cycle and ice sheet models, typically run at intermediate resolution (100-150km grids) to study longer term feedbacks past mid-century, using a new set of scenarios that include both mitigation and non-mitigation emissions profiles.

- Stronger links between communities – e.g. WCRP, IGBP, and the weather prediction community, but most importantly, stronger interaction between the three working groups of the IPCC: WG1 (which looks at the physical science basis), WG2 (which looks at impacts, adaptation and vulnerability), and WG3 (integrated assessment modelling and scenario development). The lack of interaction between WG1 and the others has been a problem in the past, especially for WG2 and WG3, as they’re the ones trying to understand the impacts of different policy choices.

The model experiments for CMIP5 are not dictated by IPCC, but selected by the climate science community itself. A large set of experiments has been identified, intended to provide a 5-year framework (2008-2013) for climate change modelling. As not all modelling groups will be able to run all the experiments, they have been prioritized into three clusters: A core set that everyone will run, and two tiers of optional experiments. Experiments that are completed by early 2012 will be analyzed in the next IPCC assessment (due for publication in 2013).

The overall design for the set of experiments is broken out into two clusters (near-term, i.e. decadal runs; and long-term, i.e. century and longer), designed for different types of model (although for some centres, this really means different configurations of the same model code, if their models can be run at very different resolutions). In both cases, the core experiment set includes runs of both past and future climate. The past runs are used as hindcasts to assess model skill. Here’s the decadal experiments, showing the core set in the middle, and tier 1 around the edge (there’s no tier 2 for these, as there aren’t so many decadal experiments):

These experiments include some very computationally-demanding runs at very high resolution, and include the first generation of global cloud-resolving models. For example, the prescribed SST time-slices experiments include two periods (1979-2008 and 2026-2035) where prescribed sea-surface temperatures taken from lower resolution, fully-coupled model runs will be used as a basis for very high resolution atmosphere-only runs. The intent of these experiments is to explore the local/regional effects of climate change, including on hurricanes and extreme weather events.

Here’s the set of experiments for the longer-term cluster, marked up to indicate three different uses: Model evaluation (where the runs can be compared to observations to identify weaknesses in the models and explore reasons for divergences between the models); climate projections (to show what the models do on four representative scenarios, at least to the year 2100, and, for some runs, out to the year 2300); and understanding (including thought experiments, such as the Aqua planet with no land mass, and abrupt changes in GHG concentrations):

These experiments include a much wider range of scientific questions than earlier IPCC assessments (which is why there are so many more experiments this time round). Here’s another way of grouping the long-term runs, showing the collaborations with the many different research communities who are participating:

With these experiments, some crucial science questions will be addressed:

- what are the time-evolving changes in regional climate and extremes over the next few decades?

- what are the size and nature of the carbon cycle and other feedbacks in the climate system, and what will be the resulting magnitude of change for different mitigation scenarios?

The long-term experiments are based on a new set of scenarios that represent a very different approach than was used in the last IPCC assessment. The new scenarios are called Representative Concentration Pathways (RCPs), although as Jerry points out, the name is a little confusing. I’ll write more about the RCPs in my next post, but here’s a brief summary…

The RCPs were selected after a long series of discussions with the integrated assessment modelling community. A large set of possible scenarios was whittled down to just four. For the convenience of the climate modelling community, they’re labelled with the expected anomaly in radiative forcing (in W/m²) by the year 2100, to give us the set {RCP2.6, RCP4.5, RCP6, RCP8.5}. For comparison, the current total radiative forcing due to anthropogenic greenhouse gases is about 2W/m². But the numbers are just to help remember which RCP is which. Really, the term pathway is the important part – each of the four was chosen as an illustrative example of how greenhouse gas concentrations might change over the rest of the century, under different circumstances. They were generated from integrated assessment models that provide detailed emissions profiles for a wide range of different greenhouse gases and other variables (e.g. aerosols). Here’s what the pathways look like (the darker coloured lines are the chosen representative pathways, the thinner lines show others that were considered, and each cluster is labelled with the model that generated them; click for bigger):

Each RCP was produced by a different model, in part because no single model was capable of providing the detail needed for all four different scenarios, although this means that the RCPs cannot be directly compared, because they include different assumptions. In the graph above, the blue shading shows the range of mitigation scenarios considered, and the gray shading shows the range of non-mitigation scenarios (the two areas overlap a little).

Here’s a rundown on the four scenarios:

- RCP2.6 represents the lower end of possible mitigation strategies, where emissions peak in the next decade or so, and then decline rapidly. This scenario is only possible if the world has gone carbon-negative by the 2070s, presumably by developing wide-scale carbon-capture and storage (CCS) technologies. This might be possible with an energy mix by 2070 of at least 35% renewables, 45% fossil fuels with full CCS (and 20% without), along with use of biomass, tree planting, and perhaps some other air-capture technologies. [My interpretation: this is the most optimistic scenario, in which we manage to do everything short of geo-engineering, and we get started immediately].

- RCP4.5 represents a less aggressive emissions mitigation policy, where emissions peak before mid-century, and then fall, but not to zero. Under this scenario, concentrations stabilize by the end of the century, but won’t start falling, so the extra radiative forcing at the year 2100 is still more than double what it is today, at 4.5W/m². [My interpretation: this is the compromise future in which most countries work hard to reduce emissions, with a fair degree of success, but where CCS turns out not to be viable for massive deployment].

- RCP6 represents the more optimistic of the non-mitigation futures. [My interpretation: this scenario is a world without any coordinated climate policy, where there is still significant uptake of renewable power, but not enough to offset fossil-fuel driven growth among developing nations].

- RCP8.5 represents the more pessimistic of the non-mitigation futures. For example, by 2070, we would still be getting about 80% of the world’s energy needs from fossil fuels, without CCS, while the remaining 20% come from renewables and/or nuclear. [My interpretation: this is the closest to the “drill, baby, drill” scenario beloved of certain right-wing American politicians].
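To keep the four labels straight, here’s a minimal sketch in Python that just tabulates the rundown above: each RCP’s nominal forcing in 2100 (read off its label) and whether it’s a mitigation scenario. The 2 W/m² figure for today is the rough value quoted earlier, and the names `RCPS`, `TODAY_FORCING` and `ratio_to_today` are mine, made up purely for illustration.

```python
# Nominal radiative forcing in 2100 (W/m^2), read straight off the RCP labels,
# and whether the rundown above describes the pathway as a mitigation scenario.
RCPS = {
    "RCP2.6": {"forcing_2100": 2.6, "mitigation": True},
    "RCP4.5": {"forcing_2100": 4.5, "mitigation": True},
    "RCP6": {"forcing_2100": 6.0, "mitigation": False},
    "RCP8.5": {"forcing_2100": 8.5, "mitigation": False},
}

# Rough present-day forcing quoted in the post (an approximation, not a measurement).
TODAY_FORCING = 2.0


def ratio_to_today(name):
    """Return an RCP's nominal 2100 forcing as a multiple of today's rough value."""
    return RCPS[name]["forcing_2100"] / TODAY_FORCING


for name, info in RCPS.items():
    kind = "mitigation" if info["mitigation"] else "non-mitigation"
    print(f"{name}: {info['forcing_2100']} W/m^2 in 2100, "
          f"{ratio_to_today(name):.2f}x today ({kind})")
```

As the post says, the numbers are only mnemonic labels; the sketch says nothing about the shape of each pathway, which is the part that matters.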

Jerry showed some early model results for these scenarios from the NCAR model, CCSM4, but I’ll save that for my next post. To summarize:

- 24 modelling groups are expected to participate in CMIP5, and about 10 of these groups have fully coupled earth system models.

- Data is currently in from 10 groups, covering 14 models. Here’s a live summary, currently showing 172TB, which is already more than 5 times all the model data for CMIP3. Jerry put the total expected data at 1-2 petabytes, although in a talk later in the afternoon, Gary Strand from NCAR pegged it at 2.2PB. [Given how much everyone seems to have underestimated the data volumes from the CMIP5 experiments, I wouldn’t be surprised if it’s even bigger. Sitting next to me during Jerry’s talk, Marie-Alice Foujols from IPSL came up with an estimate of 2PB just for all the data collected from the runs done at IPSL, of which she thought something like 20% would be submitted to the CMIP5 archive].

- The model outputs will be accessed via the Earth System Grid, and will include much more extensive documentation than previously. The Metafor project has built a controlled vocabulary for describing models and experiments, and the Curator project has developed web-based tools for ingesting this metadata.

- There’s a BAMS paper coming out soon describing CMIP5.

- There will be a CMIP5 results session at the WCRP Open Science Conference next month, another at the AGU meeting in December, and another at a workshop in Hawaii in March.
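For a sense of scale, here’s a back-of-the-envelope check of the data volumes quoted in this summary, as a small Python sketch. All the figures come from the post; the variable names are mine, and I’m assuming 1PB = 1000TB to keep the arithmetic simple.

```python
# Data volumes quoted in the post, in terabytes (assuming 1 PB = 1000 TB).
CMIP3_TB = 31               # the full CMIP3 archive at PCMDI
CMIP5_SO_FAR_TB = 172       # the live summary at the time of writing
CMIP5_EXPECTED_TB = 2200    # Gary Strand's 2.2 PB estimate
IPSL_TOTAL_TB = 2000        # Marie-Alice Foujols' estimate for all IPSL runs
IPSL_SUBMIT_FRACTION = 0.2  # her rough guess at the share going to the archive


def times_bigger(new_tb, old_tb):
    """How many times larger one dataset is than another."""
    return new_tb / old_tb


ipsl_submitted_tb = IPSL_TOTAL_TB * IPSL_SUBMIT_FRACTION

print(f"CMIP5 so far vs CMIP3: {times_bigger(CMIP5_SO_FAR_TB, CMIP3_TB):.1f}x")
print(f"CMIP5 expected vs CMIP3: {times_bigger(CMIP5_EXPECTED_TB, CMIP3_TB):.0f}x")
print(f"IPSL share submitted to the archive: about {ipsl_submitted_tb:.0f} TB")
```

So the data already in is about five and a half times the whole of CMIP3, and if the 20% guess holds, IPSL alone would submit many times more than the entire CMIP3 archive.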

Al Gore is part way through a 24-hour, round-the-globe, live broadcast, called Climate Reality. I haven’t been able to tune in as I’m travelling, but it includes broadcasts from NCAR in Boulder, and the University of Victoria. I hope it helps get the science across, but 24 hours seems like a lot in today’s soundbite world.

I’m struck by the thought that one of the key problems for getting the message across is the complexity of the problem, and the lack of a simple slogan to unite everyone. I came up with a design for a T-shirt, but somehow it doesn’t resonate:

Hmm, actually I quite like it. Maybe I should have some T-shirts printed…

I’ve been invited to give a guest seminar to the Dynamics of Global Change core course, which is being run this year by Prof Robert Vipond, of the Munk School of Global Affairs. The course is an inter-disciplinary exploration of globalization (and especially global capitalism) as a transformative change to the world we live in. (One of the core texts is Jan Aart Scholte’s Globalization: A Critical Introduction).

My guest seminar, which I’ve titled “Climate Change as a Global Challenge”, comes near the middle of the course, among a series of different aspects of globalization, including international relations, global mortality, humanitarianism, and human security. I had to provide some readings for the students, and had an interesting time whittling it down to a manageable set (they’ll only get 1 week in which to read them). Here’s what I came up with, and some rationale for why I picked them:

- Kartha S, Siebert CK, Mathur R, et al. A Copenhagen Prognosis: Towards a Safe Climate Future.

I picked this as a short (12 page) overview of the latest science and policy challenges. I was going to use the much longer Copenhagen Diagnosis, but at 64 pages, I thought it was probably a bit much, and anyway, it’s missing the discussion about emissions allocations (see fig 11 of the Prognosis report), which is a nice tie in to the globalization and international politics themes of the course…

- Rockström J, Steffen W, Noone K, et al. A Safe Operating Space for Humanity. Nature. 2009;461(7263):472–475.

This one’s very short (4 pages) and gives a great overview of the concept of planetary boundaries. It also connects up climate change with a set of related boundary challenges. And it’s rapidly become a classic.

- Müller P. Constructing climate knowledge with computer models. Wiley Interdisciplinary Reviews: Climate Change. 2010.

A little long, but it’s one of the best overviews of the role of modeling in climate science that I’ve ever seen. As part of the aim of the course is to examine the theoretical perspectives and methodologies of different disciplines, I want to spend some time in the seminar talking about what’s in a climate model, and how they’re used. I picked Müller over and above another great paper, Moss et al on the next generation of scenarios, which is an excellent discussion of how scenarios are developed and used. However, I think Müller is a little more readable, and covers more aspects of the modeling process.

- Jamieson D. The Moral and Political Challenges of Climate Change. In: Moser SC, Dilling L, eds. Creating A Climate for Change. Cambridge University Press; 2006:475-482.

Nice short, readable piece on climate ethics, as an introduction to issues of equity and international justice…

So that’s the readings. What do you all think of my choice?

I had to sacrifice another set of readings I’d picked out on Systems Thinking and Cybernetics, for which I was hoping to use at least the first chapter of Donella Meadows’ book, because it offers another perspective on how to link up global problems and our understanding of the climate system. But that will have to wait for a future seminar…

Excellent news: Our study of the different meanings scientists ascribe to concepts such as openness and reproducibility is published today in PLoS ONE. It’s an excellent read. And it’s in an open access journal, so everyone can read it (just click the title):

On the Lack of Consensus over the Meaning of Openness: An Empirical Study

Alicia Grubb and Steve M. Easterbrook

Abstract: This study set out to explore the views and motivations of those involved in a number of recent and current advocacy efforts (such as open science, computational provenance, and reproducible research) aimed at making science and scientific artifacts accessible to a wider audience. Using an exploratory approach, the study tested whether a consensus exists among advocates of these initiatives about the key concepts, exploring the meanings that scientists attach to the various mechanisms for sharing their work, and the social context in which this takes place. The study used a purposive sampling strategy to target scientists who have been active participants in these advocacy efforts, and an open-ended questionnaire to collect detailed opinions on the topics of reproducibility, credibility, scooping, data sharing, results sharing, and the effectiveness of the peer review process. We found evidence of a lack of agreement on the meaning of key terminology, and a lack of consensus on some of the broader goals of these advocacy efforts. These results can be explained through a closer examination of the divergent goals and approaches adopted by different advocacy efforts. We suggest that the scientific community could benefit from a broader discussion of what it means to make scientific research more accessible and how this might best be achieved.

For the Computing in Atmospheric Sciences workshop next month, I’ll be giving a talk entitled “On the relationship between earth system models and the labs that build them”. Here’s the abstract:

In this talk I will discuss a number of observations from a comparative study of four major climate modeling centres:

– the UK Met Office Hadley Centre (UKMO), in Exeter, UK

– the National Centre for Atmospheric Research (NCAR) in Boulder Colorado,

– the Max-Planck Institute for Meteorology (MPI-M) in Hamburg, Germany

– the Institut Pierre Simon Laplace (IPSL) in Paris, France.

The study focussed on the organizational structures and working practices at each centre with respect to earth system model development, and how these affect the history and current qualities of their models. While the centres share a number of similarities, including a growing role for software specialists and greater use of open source tools for managing code and the testing process, there are marked differences in how the different centres are funded, in their organizational structure and in how they allocate resources. These differences are reflected in the program code in a number of ways, including the nature of the coupling between model components, the portability of the code, and (potentially) the quality of the program code. While all these modelling centres continually seek to refine their software development practices and the software quality of their models, they all struggle to manage the growth (in terms of size and complexity) in the models. Our study suggests that improvements to the software engineering practices at the centres have to take account of differing organizational constraints at each centre. Hence, there is unlikely to be a single set of best practices that works everywhere. Indeed, improvements in modelling practices usually come from local, grass-roots initiatives, in which new tools and techniques are adapted to suit the context at a particular centre. We suggest therefore that there is a need for a stronger shared culture of describing current model development practices and sharing lessons learnt, to facilitate local adoption and adaptation.

Here’s some events related to climate modeling and software/informatics that look interesting for the rest of this year. I won’t be able to make it to all of them (I’m trying to cut down on travel, for various reasons), but they all look tempting:

- The First International Workshop on Climate Informatics, at the New York Academy of Sciences, August 26, 2011. This is organised jointly by Columbia University and NASA GISS, and aims to bring together folks working in computational intelligence and climate science. Unfortunately, I can’t go, it’s family vacation time. Hope there’s another next year…

- Computing in Atmospheric Sciences Workshop, in Annecy, France, Sept 11-14, 2011. I’ve been invited to speak at this one. Gotta register this week to get the cheaper registration…

- The World Climate Research Program’s Open Science Conference, “Climate Research in Service to Society”, in Denver, USA, Oct 24-28, 2011. I’ll be going to this one, to present a poster on the work of the Benchmarking and Assessment Working Group of the WMO’s Surface Temperature Initiative.

And then of course, in December, it’s the AGU Fall Meeting. Abstracts are due tomorrow, so we’ll be busy for the next 24 hours. Here’s a selection of conference tracks that look fascinating to me. In the Union sessions there are some tracks that look at the big picture:

- U10 Climate Confluence Issues (Energy, Environment, Economics, Security)

- U15 Data and Information Quality Really Matters in the Era of Predictive and Often Contentious Science

- U18 Effectively Communicating Climate Science (How to Address Related Issues)

- U20 Geoengineering Research Policy

In the Education sessions, they’ve introduced a whole set of tracks on climate literacy:

- ED08 Climate Change Education: What Educational Research Reveals About Teaching and Learning About Climate Change

- ED09 Climate Literacy: Addressing Barriers to Climate Literacy – What Does the Research Tell Us?

- ED10 Climate Literacy: Evidence of Progress in Improving Climate Literacy

- ED11 Climate Literacy: Higher Education Responding to Climate Change

- ED12 Climate Literacy: Integrating Research and Education, Science & Solutions

- ED13 Climate Literacy: New Approaches for Tackling Complex and Contentious Issues in Museums, Zoos and Aquariums

- ED14 Climate Literacy: Pre-college Activities That Support Climate Science Careers and Climate Conscious Citizens

- ED15 Climate Literacy: The Role of Belief, Trust and Values in Climate Change Science Education Efforts

And of course, many sessions on climate modeling and climate data in the Global Environmental Change sessions. I’ll go to many of these, but the following are ones I’ve especially enjoyed in previous years:

- GC05 Climate Modeling 3. Uncertainty Quantification and its Application to Climate Change

- GC06 Climate Modeling 4. Methodologies of Climate Model Evaluation, Confirmation and Interpretation

Of course, the Informatics sessions are where all the action is. I’m glad to see there’s a track on Software Engineering Challenges again this year, and there are some interesting sessions on visualization, decision support, open source and data quality (among my pet themes!):

- IN07 Climate Knowledge Discovery, Integration and Visualization

- IN08 Computational and Software Engineering Challenges in Earth Science

- IN09 Creating Decision Support Products in a Rapidly Changing Environment

- IN30 Software Reuse and Open Source Software in Earth Science

- IN31 The Challenge of Data Quality in Earth Observations and Modeling

Finally, a couple of sessions in the Public Affairs division look interesting:

Phew. Looks like it’ll be a busy week.

My wife and I have been (sort of) vegetarian for twenty years now. I say “sort of” because we do eat fish regularly, and we’re not obsessive about avoiding foods that might have animal stock in them. More simply, we avoid eating meat. However I often find it hard to explain this to people, partly because it doesn’t seem to fit with anyone’s standard notion of vegetarianism. Reactions tend to fall somewhere between being weirded out by the idea of a diet that’s not based on meat and a detailed quizzing of how I decide which creatures I’m willing to eat. The trouble is, our decision to avoid meat isn’t based on a concern for animals at all.

The reason we went meat-free many years ago was threefold: it’s cheaper, healthier and better for the planet. Once we’d done it, we also found ourselves eating a more varied and flavourful diet, but that was really just a nice side-effect.

The “better for the planet” part was based on several years of reading about issues in global equity and food production, which I have found hard to explain to people in a succinct way. In other words, yes, it would require me to be boring for an entire evening’s dinner party, which I would prefer not to do.

Now I can explain it much more succinctly, with just one graph (h/t to Neil for this):

Whew, it’s hot out there today. Toronto was the hottest place in Canada this afternoon. The humidex hit 51. Environment Canada tells me that above 45 is dangerous, and around 54 means “heatstroke imminent”. I just stepped outside to see what it’s like and … it’s like nothing I’ve ever felt before. My better half has forbidden me from cycling home in this, so I’m pondering what to do next. This feels like a taste of “Our future on a hotter planet”. So, some idle thoughts…

To many people, living comfortable middle class lives in North America, climate change is some vague distant threat that will mainly affect the poor in other parts of the world. So it’s easy to dismiss, no matter how agitated the scientists get. If you follow this line of thinking, it quickly becomes clear why responses to climate change divide cleanly along political lines:

- If you care a lot about fairness and equity, climate change is an urgent, massive problem, because millions (maybe even billions) of poor people will suffer, die, or become refugees as the climate changes.

- On the other hand, if you’re comfortable with a world in which there are massive inequalities, where some people live rich lavish lifestyles while others starve to death, then climate change is a minor distraction. After all, famines in undeveloped countries are really nothing new, and we in the west are rich enough to adapt (Or are we?).

The dominant political ideology in the west (certainly in the English-speaking countries) is that such inequality is not just acceptable, but necessary. So it’s hardly surprising that right wing politicians dismiss climate change as irrelevant. No amount of science education will change the minds of people who believe, fundamentally, that they have no obligation to people who are less fortunate than themselves. As long as they believe that they are wealthy enough that climate change won’t affect them, that is.

But doesn’t a heatwave in a Canadian (!) city that makes it dangerous to be outside change things completely? We Canadians are used to the cold. We know how to dress up, and we embrace winter through a variety of winter sports. You can’t embrace extreme heat in the same way. If the body cannot cool down, you die. No matter how rich you are.

If people start to understand that this will be the new normal, it changes the issue from a question of equity to a question of health. Our elderly relatives are at risk first. And small children. But even a healthy adult can’t avoid heat stress if the body cannot cool down enough. What’s unusual about this heatwave (and the one that hit Europe in 2003) is that it doesn’t cool down much overnight. And nighttime temperatures are rising even faster than daytime temperatures. That’s a massive threat to public health, especially in cities.

And with that thought, how am I going to get home?

One of the favourite jokes amongst my kids right now is the one about Youtube, Twitter and Facebook all merging to make a new service called YouTwitFace:

And now, just as I was getting used to Twitter (I finally signed up in April), along comes Google+.

But I haven’t yet figured out how to connect up Twitter with my WordPress blog and my Facebook page. Facebook seems like a good way to keep in touch with people, except some of my “friends” keep polluting the news stream with stuff about their fantasy lives on virtual farms and stuff, and the whole thing seems overburdened with unnecessary features. Twitter seems to have a much better signal-to-noise ratio (although maybe that’s because I’m only following a bunch of workaholics, or at least people who keep their personal lives separate).

Now what I really want isn’t another competitor. I want an automated blend of the best aspects of the existing services. I want to combine my Facebook newsfeed and my Twitter feed, while filtering out the FarmVille crap. I want to automatically include the RSS feeds from blogs that I follow, but filter out duplicates for those people who also tweet and/or link their blog posts in Facebook. I want my blog to create automatic tweets when I post (I’ve now tried several different WordPress plugins for this, and none of them work). And for tweets announcing new blog posts, I want more than Twitter offers – I at least want to know which blog it is. Twitter tends to give me just the title/subject of the post and a cryptic URL. I actually like Facebook’s approach best here, where you get a brief excerpt from the blog post in the feed.

And now if I start using Google+, I have another feed to blend in. Blending the feeds ought to be easy, from a technical point of view. But if it’s easy, why aren’t there simple pushbutton solutions already? Do I really have to write my own scripts for this? Does everyone?
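For what it’s worth, the blending itself is only a few lines once the items are in hand. Here’s a minimal sketch in Python of the merge, filter and de-duplicate steps. It deliberately leaves out the hard part (actually fetching items from each service), and the `Item` class, the `blend` function and the sample data are all hypothetical, invented just for this illustration.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class Item:
    source: str       # hypothetical labels, e.g. "twitter", "facebook", "rss"
    author: str
    text: str
    url: str          # the link the item points to ("" if none)
    posted: datetime


def blend(feeds, blocked_words=()):
    """Merge several feeds, newest first, dropping noise and repeated links."""
    seen_urls = set()
    kept = []
    # Walk all items oldest first, so the earliest mention of a link is the one kept.
    for item in sorted((i for feed in feeds for i in feed), key=lambda i: i.posted):
        lowered = item.text.lower()
        if any(word.lower() in lowered for word in blocked_words):
            continue  # filtered out as noise
        if item.url:
            if item.url in seen_urls:
                continue  # same link already seen via another feed
            seen_urls.add(item.url)
        kept.append(item)
    kept.reverse()  # newest first, like a normal news stream
    return kept


# Made-up sample data: one blog post announced twice, one game update, one plain tweet.
rss = [Item("rss", "alice", "New post on CMIP5", "http://example.org/cmip5",
            datetime(2011, 7, 21, 9, 0))]
twitter = [
    Item("twitter", "alice", "New post: CMIP5", "http://example.org/cmip5",
         datetime(2011, 7, 21, 9, 5)),
    Item("twitter", "bob", "Hot in Toronto today", "", datetime(2011, 7, 21, 15, 0)),
]
facebook = [Item("facebook", "carol", "Carol found a cow in FarmVille", "",
                 datetime(2011, 7, 21, 12, 0))]

result = blend([rss, twitter, facebook], blocked_words=["farmville"])
for item in result:
    print(item.posted, item.source, item.author, item.text)
```

The catch is that this only spots duplicates when the links match exactly, so those cryptic shortened URLs would have to be expanded first, which is probably part of the answer to why there’s no pushbutton solution.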

It’s enough to make me sign up for the Slow Science Movement:

We are scientists. We don’t blog. We don’t twitter. We take our time.

Okay, so it’s a few years old, but I’d never seen this series of videos by National Geographic before. I’m not convinced you can slice the impacts into 1 degree increments with any reliability, but this series does a nice job of getting the big picture across (much better than Lynas’ book, which I found to be too lacking in narrative structure):

- Could Just One Degree Change the World?

- 2 Degrees Warmer: Ocean Life in Danger

- 3 Degrees Warmer: Heat Wave Fatalities

- 4 Degrees Warmer: Great Cities Wash Away

- 5 Degrees Warmer: Civilization Collapses

- 6 Degrees Warmer: Mass Extinction?

What’s uncanny is how much of what’s presented in the 1 degree video is already happening now.