What unites the climate crisis and the financial crisis? What is it that has driven scientists and environmentalists to risk arrest in protests across the world? What is it that’s driven people from all walks of life to show up in their thousands to occupy their cities? In both cases, there’s a growing sense that the system is fundamentally broken, and that our current political elites are unable (rather than just unwilling) to fix it. And in both cases, it’s becoming increasingly apparent that our current political system is a major cause of the problems, which makes it even harder to discover solutions.

So how do we make progress? If we’re going to take seriously the problems that have led people to take to the streets, then we have to understand the processes that are steadily driving us in the wrong direction when it comes to things we care about – a clean environment, a stable climate, secure jobs, a stable economy. In other words, we have to understand the underlying systems, understand the dynamics within those systems, and we have to find the right leverage points that would allow us to change those dynamics to work the way we would like.

Failure to take a systems view is evident throughout discussions of climate change, and now, more recently, throughout mainstream media discussions about the Occupy protests. Suggestions for what needs fixing tend to focus on superficial aspects of the systems that matter, mainly by tinkering with parameters (emissions targets, stabilization wedges, the size of the debt, the bank interest rate, etc). If the system itself is broken, you can’t fix it by adjusting its current parameters – you have to look at the underlying dynamics and change the structure of the system that gave rise to the problem in the first place. Most people are focusing on the wrong leverage points. Even worse, in some cases, they are pushing in the wrong direction on some of the leverage points…

Perhaps the best analysis of this I’ve ever seen is Donella Meadows’ essay on leverage points. [If you’re not already familiar with it, I highly recommend reading it before tackling the rest of this post]. Meadows has written some wonderfully accessible material on systems thinking, but only gives a very brief overview in this particular essay, because she’s focussing here on how to identify leverage points that allow one to alter a system. She identifies twelve places to look, and orders them, roughly, from the least effective to the most effective.

To illustrate the point, I’ll begin with a much simpler system than the ones we really want to fix. My example is the controllability of water temperature in a shower. The particular shower I have in mind is in a small hotel in Paris, and is a little antiquated, the result of old-fashioned plumbing. It takes time for the hot water to reach the shower head from the hot water tank, and there’s enough of a delay between the taps that control the hot and cold water and the temperature response, that you’re forever trying to adjust it to get a good temperature. It’s too cold, so you turn up the hot tap. The temperature barely seems to change, so you crank it up a lot. After a few minutes the water heats up so much it’s scalding. So you crank up the cold tap. Again, the temperature responds slowly until you realise it’s now too cold. You turn down the cold tap, and soon find it’s too hot again. And so on.
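The overshoot cycle described above can be reproduced with a toy simulation. This is only a rough sketch under assumptions of my own: the pipe is modelled as a pure delay of a few time steps, the bather nudges the tap in proportion to how far the felt temperature is from the target, and all the numbers (target of 38°C, tap range of 10 to 60°C, gains of 0.5 and 0.1) are hypothetical values chosen for illustration, not measurements of any real shower.

```python
from collections import deque


def simulate_shower(gain, delay_steps=5, target=38.0, start=20.0,
                    steps=200, coldest=10.0, hottest=60.0):
    """Return the temperatures felt at the shower head over time.

    gain        -- how hard the bather turns the tap per degree of error
                   (hypothetical parameter: large = impulsive, small = patient)
    delay_steps -- time steps for water to travel from the tap to the head
    """
    tap = start
    # Water already in the pipe; the leftmost entry reaches the head next.
    pipe = deque([start] * delay_steps, maxlen=delay_steps)
    felt_history = []
    for _ in range(steps):
        felt = pipe[0]              # water that left the tap delay_steps ago
        felt_history.append(felt)
        pipe.append(tap)            # maxlen silently drops the oldest water
        # The bather reacts to what they feel now, not to the tap setting.
        tap = tap + gain * (target - felt)
        tap = min(hottest, max(coldest, tap))  # taps have physical limits
    return felt_history


if __name__ == "__main__":
    impulsive = simulate_shower(gain=0.5)
    patient = simulate_shower(gain=0.1)
    swing = max(impulsive[-50:]) - min(impulsive[-50:])
    print(f"impulsive bather: temperature still swinging by {swing:.1f} degrees")
    print(f"patient bather:   final temperature {patient[-1]:.2f}")
```

In this sketch the impulsive bather never settles, swinging between the hot and cold extremes, while the patient bather converges on the target. The plumbing is identical in both runs; only the bather's behaviour differs.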

Does this remind you of the economy? Or, for that matter, the way the physical climate system works over the course of tens of thousands of years? More worryingly, it’s the outcome I expect if we ever try to geo-engineer our way out of extreme climate change. Right now, the human race is cranking up the hot tap. But the system responds very slowly. And by the time we’ve realized the heat has built up, we’ll have overshot our comfort zones. We’ll slam on the brakes and end up overcompensating. Because the system is just as hard to control as (actually, a damn sight harder than!) that annoying shower in Paris.

Let’s look at how Meadows’ twelve leverage points might help us analyze the Parisian shower. At #12, we have what is usually the least effective place to seek change:

#12. Changes in constants, parameters, numbers. Example: Change the set point on the water tank thermostat. In general, such adjustments make no difference to the controllability of the shower. [There is an interesting exception, when we’re prepared to make really big adjustments. For example, if we crank the thermostat on the hot water tank all the way down to ‘pleasantly warm’ we’ll never have to balance the hot and cold taps again; we can just use the “hot” tap. Usually, such really big adjustments are unlikely to be made, for other reasons.]

#11. Change the sizes of buffers and stocks relative to their flows. Example: Get a bigger hot water tank. This will make the energy bills bigger, but still won’t make the shower any more controllable.

#10. Change the structure of material stocks and flows. Example: Replace the water pipes with smaller diameter pipes. This might help, as it reduces the thermal mass of the pipes, and hence, may affect the lag between the water tank and the shower, leading to more responsive shower controls.

#9. Change the length of delays, relative to rate of system change. Example: Relocate the hot water tank closer to the bathroom; or wait a little longer for the temperature response to settle before touching the taps again. Such changes might be hard to achieve (hence they’re high up on the list), but very effective if we could do them.

#8. Increase the strength of negative feedback loops relative to the impacts they try to correct against. Example: Take a deep breath and calm down – you’re the negative feedback trying to keep the system stable. If you’re less impulsive on the taps, you’ll help to dampen the temperature fluctuations. If the system is changing too quickly, or is subject to instability, identifying the negative feedbacks, and working to strengthen them can often yield simple and effective leverage points. But when you’re in the shower getting scalded, it might be hard to remember this.

#7. Reduce the gain around positive feedback loops. Example: Replace the taps with ones that offer a finer level of control. This reduces the big temperature fluctuations when we turn the taps too quickly, and hence reduces the positive feedback loop that leads to temperature overshoot.

#6. Change the structure of information flows, to alter who does (or does not) have access to information. Example: Put an adjustable marker on the shower dial to record a preferred setting. Changing the flows of information about a system is generally much easier and cheaper than changing any other aspect of a system, hence, it’s often a more powerful leverage point than any of the above. For the shower, this one tiny fix may entirely cure the temperature fluctuation problem.

#5. Change the rules of the system (incentives, punishments, constraints). Example: Set limits on the amount of time you can shower for. This might reduce the incentive to spend time fiddling with the temperature controls. But who will enforce the constraint?

#4. Nurture the power to add, change, evolve or self-organize system structure. Example: Teach yourself to tolerate a wider range of shower temperatures; or: design a new automated temperature controller.

#3. Change the goal of the system. Example: Focus on getting clean quickly rather than getting the water to exactly the desired temperature. Of course, changing the goal of the system is hard, because it means changing people’s perceptions of the system itself.

#2. Change the mindset or paradigm out of which the system arises. Example: Is cleanliness over-rated? or: why stay in these antiquated hotels in Paris anyway? Paradigm shifts are hard to achieve, but when they happen, they have a dramatic transformative effect on the systems that arose in the previous paradigm.

#1. The power to transcend paradigms. Example: Learn systems thinking and gain the ability to understand a system from multiple perspectives; realise that system structure and behaviour arise from the dominant paradigm; explore how our own perspectives shape our interactions with the system.

Note that Meadows emphasizes the point that all twelve types of leverage point can be effective for changing systems, if you have a good understanding of how the system works, and can make good choices for where to make changes. However, in a self-perpetuating system, the dynamics that created the problem you’re trying to solve will also tend to defeat most kinds of change, unless they really do alter those dynamics in an important way.

Note that for many of these examples, I’ve chosen to include the person in the shower as part of my ‘shower system’. More importantly, some of my suggestions refer to how the person in the shower understands the shower system, and how her understanding of the system affects the system’s behaviour. This is to emphasize a mistake we often make when thinking about both the climate and the economy. In both cases, we have to understand the role that people play within these systems, and especially how our expectations and cultural norms themselves form part of the system. If people, in general, have the wrong mental model of how the systems work, it’s significantly more challenging to figure out how to fix things when they go wrong.

Let’s look at how the list of leverage points applies to the climate system and the financial system.

#12. Changes in constants, parameters, numbers

- Climate System: tighten pollution standards, negotiate stronger version of the Kyoto protocol, increase fuel taxes, etc.

- Financial System: change the interest base rates, increase size of stimulus spending, increase taxes, cut government spending, put caps on campaign contributions, increase the minimum wage, vote for the other party.

While changing the parameters of the existing system can make a difference, it’s rare that it does. Systems tend to operate in regions where small parameter adjustments make no difference to the overall stability of the system. If there’s a systemic effect that is pushing a system in the wrong direction (dependence on fossil fuels, financial instability, poverty, etc), then adjusting the system’s parameters is unlikely to make much difference, if you don’t also change the structure of the system.

None of these examples are likely to make much difference to the underlying problems. To understand this point, you have to understand the system you’re dealing with. For example, the whole problem of climate change itself might appear to be the result of a small parameter change – a small increase in radiative forcing, caused by a small increase (measured in parts per million!) in atmospheric concentrations of certain gases. But that’s not the real cause. The real cause is a systemic change in human activity that traces back to the industrial revolution: a new source of energy was harnessed, which then kicked off mutually reinforcing positive feedback loops in human population growth and energy use. A few more parts per million of CO2 in the atmosphere is not the problem; the problem is a new exponential trend that did not exist previously.

However, remember there’s sometimes an exception, if you make very large adjustments. For the climate system, you could increase fuel taxes so that gas (petrol) costs, say, ten times as much as it does today. Such an adjustment would be guaranteed to change the system (but not perhaps, when the mobs are done with you, in the way you intended). Here’s an interesting rule of thumb: if you change any parameter in a system by an order of magnitude or more, what you get is an entirely different type of system. Try it: a twenty-storey building is fundamentally different from a two-storey house. A ten-lane freeway is fundamentally different from a single-lane road. A salary of $1 million is fundamentally different from a salary of $100K.

#11. Change the sizes of buffers and stocks relative to their flows

- Climate System: Plant more forests to create bigger carbon sinks. Ocean fertilization and/or artificial trees to soak up carbon, etc.

- Financial System: Increase the federal reserve, require banks to hold larger reserves, increase debt ceiling limits.

In many systems these are hard to change, as they require large investments in infrastructure (the canonical example is a large dam to create a buffer in the water supply). This also means it can be hard to make frequent, fine-grained adjustments. More importantly, they tie up resources – keeping a large stock means that the stock isn’t working for you: your bigger water tank will make your energy bills much higher (and won’t affect your shower adjustment problem anyway). Making banks keep larger reserves will make them much less dynamic, and will reduce the funds available for lending.

All of these things might ease the problem a little, but none of them will make any significant difference to the cause of the problem. No matter how big you make the reserves, you’ll quickly be defeated by the exponential growth curves that you didn’t tackle.
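To make the point about buffers and exponential growth concrete, here is a small illustrative calculation. It is a sketch only: the buffer sizes, the starting inflow of one unit per year, and the 3% annual growth rate are hypothetical numbers picked for the example, not estimates for any real carbon sink or bank reserve.

```python
def years_until_full(capacity, first_year_inflow=1.0, growth=0.03):
    """Count the years until a buffer of the given capacity fills up,
    when the inflow grows by a fixed fraction every year.

    All parameter values are hypothetical, for illustration only.
    """
    filled = 0.0
    inflow = first_year_inflow
    years = 0
    while filled < capacity:
        filled += inflow
        inflow *= 1.0 + growth   # the exponential trend we failed to tackle
        years += 1
    return years


if __name__ == "__main__":
    for capacity in (100, 1000, 10000):
        print(f"buffer of {capacity:>5} units lasts "
              f"{years_until_full(capacity)} years")
```

With a constant inflow, a buffer ten times larger lasts ten times longer. With an exponentially growing inflow, each tenfold increase in the buffer buys only a roughly fixed number of extra years, which is why enlarging the buffer is such a weak leverage point.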

#10. Change the structure of material stocks and flows

- Climate System: Carbon Capture and Storage (diverts emissions at the point they are generated, so they don’t enter the atmosphere).

- Financial System: Create a Tobin tax, which diverts a small percentage of each financial transaction to create a new pool of money to fix problems. Create new kinds of super-tax on the very rich. Separate the high street banks from their gambling investment operations.

Physical structure is also, usually, very hard to change, once the system is operating, although it’s sometimes easier to change how things flow than it is to create new buffers. However, both types of change tend to have limited impact, because the stocks and flows arise from the nature of the system – which means the system itself will find ways of defeating your efforts, for the same reason it ended up like it is now.

For example, separating high street banks from investment firms won’t really achieve much. People will find other ways to gamble bank money on foolish investments, if you haven’t actually addressed the reasons why such investments are made in the first place. Similarly for carbon capture and storage – diverting some percentage of the carbon that would go into the atmosphere via (expensive) CCS won’t help if our use of fossil fuels continues to grow at the rate it has done in the past. The fundamental problem of exponential growth in fossil fuel use will always outstrip our attempts to sequester some of it. There’s also the problem that on the timescales that matter (decades to centuries), it’s not clear the carbon will stay put. Oh, and CCS is only ever likely to be feasible on large, static sites like big power plants, so won’t make any difference to emissions from transport, aviation, agriculture, etc.

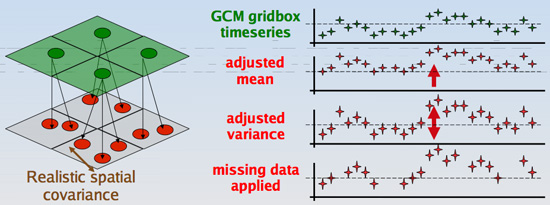

#9. Change the length of delays, relative to rate of system change

- Climate System: Speed up widescale deployment of clean energy technologies. Speed up the legislative process for climate policy. Speed up implementation of new standards for emissions. Use a faster ramp up on carbon pricing. Lengthen the approval process for new fossil fuel plants, oil pipelines, etc.

- Financial System: Lengthen the approval process for risky loans, mergers, etc. Speed up implementation of government jobs programs. Slow down economic growth (to remove the boom and bust cycles).

Often, these kinds of change can be very powerful leverage points, but many of the delays in large systems are impossible to shorten – things take as long as they take. Also, as Meadows points out, most people try to shift things in the wrong direction, as many of these changes are counter-intuitive. For example, reducing the delay in money transfer times just increases the chance of wild gyrations in the markets. Governments around the world usually seek to maximize economic growth, when often they should be trying to dampen it.

#8. Increase the strength of negative feedback loops relative to the impacts they try to correct against.

- Climate System: Make the price of all goods reflect their true environmental cost. Remove perverse subsidies to fossil fuel companies (the cost of extraction & processing should be a negative feedback loop on dependence on fossil fuels); introduce better monitoring and data collection for global carbon fluxes, to more quickly assess impacts of different actions.

- Financial System: More transparent democracy to allow people to vote out corrupt politicians more quickly; Remove subsidies, bailouts, etc (these distort the negative feedbacks that keep the financial system stable); protection for whistleblowers; more scrutiny of boardroom pay rises by unions and shareholders.

Negative feedbacks are what tend to keep a system stable. If the system is changing too quickly, or is subject to instability, identifying the negative feedbacks, and working to strengthen them, can often yield simple and effective leverage points. All of the examples here are likely to be more effective at fixing the respective systems than anything we’ve mentioned so far. Some of them rely on people acting as negative feedbacks. For example, by offering better protection for whistleblowers, you create a culture in which people are less likely to yield to corrupting influences.

#7. Reduce the gain around positive feedback loops

- Climate System: Higher energy efficiency standards (this dampens the growth in energy demand); Green development – mechanisms that allow people to improve their quality of life without needing to increase their use of fossil fuels; wider use of birth control to curb population increases.

- Financial System: Punish bankers who make reckless investment decisions (discourages others from following suit). Use a more progressive tax structure and introduce very high inheritance taxes (these prevent the rich from getting ever richer). High quality & free public education (to prevent the rich from forming privileged elites). Forgive all student loans on graduation (ends the cycle of individual indebtedness).

- Both Systems: Slower economic growth (above, I described this as a “length of delay” issue, as slower growth can allow other processes, such as clean energy technology, to keep up; but more importantly, economic growth is itself a positive feedback loop that drives ever more resource consumption, financial fluctuations and environmental degradation).

Runaway feedback loops inevitably destroy a system, unless some negative feedback loops kick in. In the long run, a negative feedback always kicks in: we use up all the resources, we kill off most of the population, we bankrupt everyone. But by that time the system has already been destroyed. The trick is to dampen the positive feedbacks long before too much damage is done, and this is often much more effective than trying to boost the strength of countervailing negative feedbacks. For example, if we want to address inequality, using tax structures that stop the rich getting ever richer is much more effective than creating anti-poverty programs aimed at mopping up the resulting inequalities.

Economic growth is an important example here. Remember that economic growth is a measure of the change in GDP over time. And GDP itself is a measure of the volume of financial transactions, or in other words, how fast money is flowing through the system. Accelerating these money flows makes all the instabilities in the financial system much worse. Worse still, one of the primary ways that GDP grows is by ever accelerating consumption of resources, so you get a self-reinforcing positive feedback loop between over-consumption and economic growth.

#6. Change the structure of information flows, to alter who does (or does not) have access to information

- Climate System: Get IPCC assessment results out to the public faster, and in more accessible formats. Put journalists in touch with climate scientists. Label products with lifecycle carbon footprint data. Put meters in cars showing the total cost of each journey.

- Financial System: Publish pay and benefit rates for all employees of private companies. Publish details of all political donations. Increase government oversight of financial transactions.

- Both Systems: Open access to data, e.g. on campaign financing, carbon emissions, etc; Require all lobbyists and think tanks to publish full details on funding sources.

Changing the flows of information about a system is generally much easier and cheaper than changing any other aspect of a system, which means these can be very powerful leverage points. Many of these examples are powerful enough to cause significant changes to the underlying behaviour in the system, because they expose problems to the people that shape the behaviour of the system.

Providing people with full information about the true cost of things at the point they use them is a very powerful inducement to change behaviour. Meadows gives an example of electricity meters in the front hall of a home, rather than in the basement. Another one that bugs me is the information imbalance between different transportation choices. We always know exactly how much a trip will cost by public transit, but the cost of driving is largely invisible (paying to fill up, paying the insurance and maintenance bills, etc are too far removed from the actual per-journey decisions). Some potential fixes are very simple: Google maps could show the cost, as well as the distance & time, when doing journey planning.
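As a rough illustration of what such a per-journey cost display would need to compute, here is a minimal sketch. The function name, its parameters and every figure in the example are hypothetical placeholders of my own, not real prices and not anything a mapping service actually exposes; the idea is simply to fold the otherwise invisible annual bills into each trip in proportion to distance.

```python
def driving_cost_per_journey(distance_km, fuel_l_per_100km, fuel_price_per_l,
                             annual_fixed_costs, annual_km):
    """Estimate the full cost of one car journey.

    Hypothetical model: fuel cost for the trip, plus a share of the yearly
    insurance and maintenance bills proportional to the distance driven.
    """
    fuel_cost = distance_km * fuel_l_per_100km / 100.0 * fuel_price_per_l
    fixed_share = annual_fixed_costs * distance_km / annual_km
    return fuel_cost + fixed_share


if __name__ == "__main__":
    # Placeholder numbers only: a 20 km trip in a car using 8 l/100 km,
    # fuel at 1.50 per litre, 3000 per year in fixed bills, 15000 km per year.
    cost = driving_cost_per_journey(20, 8, 1.5, 3000, 15000)
    print(f"estimated cost of this journey: {cost:.2f}")
```

In this made-up example, fuel accounts for less than half of the total, which is the part of the cost a driver never sees at the moment of deciding how to travel.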

#5. Change the rules of the system (incentives, punishments, constraints)

- Climate System: Government grants for energy efficiency projects. Free public transit. Jail-time for executives whose companies break carbon emissions rules. Mandatory science comprehension testing for anyone standing for public office.

- Financial System: Give workers the right to vote on boardroom pay rates. New regulations on what banks can and cannot do with investors’ money. Remove immunity from prosecution for politicians. Ban all private funding of political campaigns.

- Both Systems: Strict limits on ownership of media outlets. New ethics rules for journalists and advertisers.

These changes tend to impact the behaviour of the system immediately (as long as they’re actually enforced), and hence can have very high leverage. Unfortunately, one of the problems with fixing both the climate and financial systems is that our systems for changing the rules (e.g. legislative processes) are themselves broken. Large corporations (especially fossil fuel companies) have, over a period of many years, deliberately co-opted legislative processes to meet their own goals. Where once it might have been possible for governments to pass new laws to address climate change, or to change the way governments allocate resources, now they cannot, because these processes no longer act in the interest of the people. Similarly, the mainstream media has been co-opted by the same vested interests, so that people are fed little more than propaganda about how great the system is.

#4. Nurture the power to add, change, evolve or self-organize system structure

- Climate System: Evidence-based policymaking. Resilient communities such as Transition Towns (these empower individual communities to manage their own process of ending dependence on fossil fuels).

- Financial System: Switch from private companies to credit unions and worker-owned cooperatives.

- Both Systems: Change to proportional representation for elections (this gives a more diverse set of political parties access to power, and helps voters feel their vote counts). Celebrate social diversity and give greater access to political power for minorities (this removes the tendency to have one dominant culture, and hence helps build resilience).

Systems gain the ability to evolve because of diversity within them. In biology, we understand this well – the diversity of an ecosystem is essential for evolutionary processes to work. Unfortunately, few people understand this matters just as much for social systems. Societies with a single dominant culture tend to find it very hard to change, while societies that encourage and promote diversity are also laying the foundations for new ideas and new forms of self-organization to emerge.

The political culture in the US is a case in point. US politics is dominated by two parties that share an almost identical set of cultural assumptions, especially to do with the capitalist system, the role of markets, and the correct way to manage the economy. This makes the US particularly resistant to change, no matter how much the evidence accumulates that the system isn’t working.

#3. Change the goal of the system

- Climate System: Don’t focus on emission reduction, focus on eliminating each and every dependency on fossil fuels. Don’t focus on international negotiations towards a treaty, focus on zero-carbon strategies for each city or region.

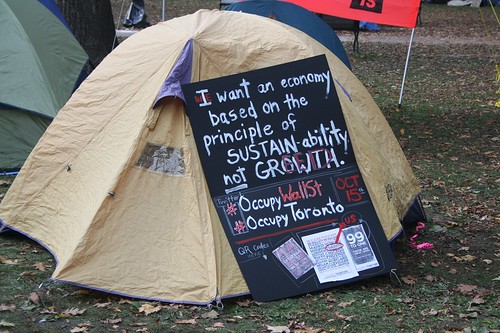

- Financial System: Stop chasing short-term corporate profits as the primary goal of the economy. Corporations should aim for sustainability rather than growth. Instead of measuring GDP, measure gross national happiness.

- Both Systems: Recognize and challenge the hidden goals of the current system, such as: the tendency for large corporations to maximize their power and influence over national governments; the desire to control access to information via media conglomerates; the desire of ruling elites to perpetuate their control.

Of course, changing the goal of the system is hard, because it means changing people’s perceptions of the system itself. It requires people to be able to step outside the system and see it from a fresh perspective, to identify how the dominant goals of the system shape its structure and operation. In short, it requires people to be systems thinkers.

There’s one kind of goal change related to the climate system that troubles me: instead of trying to prevent climate change, we could instead focus on survival strategies for living on a hotter planet. Given what we understand of the impacts on food, water and habitable regions, this would only be possible for a much smaller human population, and so it entails giving up trying to save as many people as possible. But it’s a very simple leverage point. The problem is that there are both ethical and practical reasons to reject this approach. The ethical reasons are well understood. The practical problem is that humans are very effective at fighting like crazy over diminishing resources, so it’s hard to see how this approach would work in the face of growing waves of climate refugees.

#2. Change the mindset or paradigm out of which the system arises

- Climate and Financial Systems: Triple-bottom line accounting (forces companies to balance social and environmental impact with profitability). A shift in mindset from consumerism to living in harmony with the environment. A shift from material wealth as a measure of success to (say) social connectedness. A shift in mindset from individualism to community. A shift from individual greed to egalitarianism.

Paradigm shifts are hard to achieve, but when they happen, they transform the systems that arose in the previous paradigm. Much of the root cause of current problems with climate change and financial instability lies in the dominant paradigm of the last thirty years: blind faith in the free market to fix everything, along with accumulation of wealth and material assets as a virtue.

#1. The power to transcend paradigms

- Climate and Financial Systems: Learn systems thinking and gain the ability to understand a system from multiple perspectives; realise that system structure and behaviour arise from the dominant paradigm. Explore how our own perspectives shape our interactions with the system…

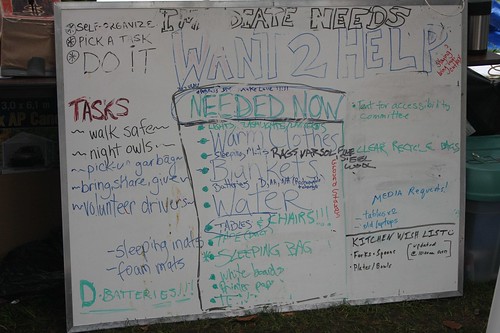

- … And then take to the streets.

Postscript: Notice that as we proceed down the list, and look at more fundamental changes to the systems, the solutions for climate change and the financial crisis start to merge. Also notice that in both cases, many of my examples aren’t what climate scientists or economists generally talk about. We need to broaden the conversation.

Our special issue of IEEE Software, for Nov/Dec 2011, is out! The title for the issue is
