Next Wednesday, we’re organising demos of our students’ summer projects, prior to the Science 2.0 conference. The demos will be in BA1200 (in the Bahen Centre), Wed July 29, 10am-12pm. All welcome!

Here are the demos to be included (the running order hasn’t been determined yet; we’ll probably pull names out of a hat…):

- Basie (demo’d by Bill Konrad, Eran Henig and Florian Shkurti)

Basie is a lightweight, web-based software project forge with an emphasis on inter-component communication. It integrates revision control, issue tracking, mailing lists, wikis, status dashboards, and other tools that developers need to work effectively in teams. Our mission is to make Basie simple enough for undergraduate students to master in ten minutes, but powerful enough to support large, distributed teams.

- BreadCrumbs (demo’d by Brent Mombourquette)

When researching, we often overlook the context in which a relevant piece of information is found. However, the journey is as important as the destination. BreadCrumbs is a Firefox extension designed to capture this journey, and therefore the context, by maintaining a well-structured, dynamic graph of an Internet browsing session. It keeps track of both the chronological order in which websites are visited and the link-by-link path between them. In addition, it provides simple tools for leaving notes to yourself, so that an accurate record of your thought process, and of why you browsed the documents you did, can be preserved with little overhead. The resulting session can then be saved and revisited later, with little or no time spent trying to recall the relevance of, or semantic relations between, documents in (for example) an unordered bookmark folder. It can also be used to share information with a colleague: not just by pointing them to a series of web pages, but by giving them a trail to follow, complete with embedded personal notes. BreadCrumbs maintains the context so that you can focus on the content.

- Feature Diagram Tool (demo’d by Ebenezer Hailemariam)

We present a software tool to assist software developers in working with legacy code. The tool reverse-engineers “dependency diagrams” from Java code, through which developers can perform refactoring actions. The tool is a plug-in for the Eclipse integrated development environment.

- MarkUs (demo’d by Severin Gehwolf, Nelle Varoquaux and Mike Conley)

MarkUs is a web application that recreates the ease and flexibility of grading assignments with pen on paper. Graders fill in a marking scheme and annotate students’ work directly. MarkUs also supports other aspects of assignment delivery and management. For example, it allows students or instructors to form groups for assignment collaboration, and allows students to upload their work for grading. Instructors can also create and manage group or solo assignments, and assign graders to mark and annotate students’ work quickly and easily.

- MyeLink: drawing connections between OpenScience lab notes (demo’d by Maria Yancheva)

MyeLink is a MediaWiki extension that facilitates connections between related wiki pages, notes, and authors. It is suitable for OpenScience research communities that maintain an online wiki collection of experiment pages. It provides search based on both the structure and the content of pages, as well as a user interface for customizing options and displaying an embedded preview of results.

- TracSNAP – Trac Social Network Analysis Plugin (demo’d by Ainsley Lawson and Sarah Strong)

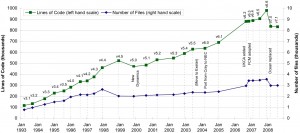

TracSNAP is a suite of simple tools to help contributors make use of information about the social aspects of their Trac coding project. It tries to help you: find out which other developers you should be talking to, by suggesting contacts based on files you have both edited; recognize files that might be related to your current work, by showing which files are often committed at the same time as yours; get a feel for who works on similar pieces of functionality, based on discussion in bug and feature tickets and on edits in common; visualize your project’s effective social network with graphs of who talks to whom; and visualize coupling between files based on how often your colleagues edit them together.

- VizExpress (demo’d by Samar Sabie)

Graphs are effective visualizations because they present data quickly and clearly. vizExpress is a MediaWiki extension that inserts user-customized tables and graphs into wiki pages without the user having to deal with complicated wiki syntax. When you edit a wiki page, the extension adds a special toolbar icon for opening the vizExpress wizard. You can provide data to the wizard by browsing to a local Excel or CSV file, or by typing (or copying and pasting) data. You can choose from eight graph types and eight graph-coloring schemes, and apply further formatting such as titles, dimensions, limits, and legend position. Once a graph is inserted in a page, you can easily edit it by restarting the wizard or modifying a simple vizExpress tag.
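
The BreadCrumbs description mentions two views of the same browsing session: the chronological order of visits and the link-by-link path. Here is a minimal sketch of how a single structure could hold both, assuming each visit records which earlier visit’s link led to it. The `Session` and `Visit` classes and their methods are hypothetical names for illustration, not BreadCrumbs’ actual data model.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Visit:
    """One page view (hypothetical model, not BreadCrumbs' real schema)."""
    url: str
    parent: Optional[int]  # index of the visit whose link led here; None if typed directly
    note: str = ""         # free-text note the user leaves for themselves


@dataclass
class Session:
    # Chronological order is simply list order.
    visits: List[Visit] = field(default_factory=list)

    def visit(self, url: str, via: Optional[int] = None) -> int:
        """Record a page view and return its index; `via` is the page whose link was followed."""
        self.visits.append(Visit(url, via))
        return len(self.visits) - 1

    def annotate(self, index: int, note: str) -> None:
        self.visits[index].note = note

    def chronology(self) -> List[str]:
        return [v.url for v in self.visits]

    def trail(self, index: int) -> List[str]:
        """Follow parent links back to the start to recover the link-by-link path."""
        path = []
        current: Optional[int] = index
        while current is not None:
            path.append(self.visits[current].url)
            current = self.visits[current].parent
        return list(reversed(path))


if __name__ == "__main__":
    s = Session()
    search = s.visit("https://example.org/search?q=graphs")
    paper = s.visit("https://example.org/paper1", via=search)
    s.visit("https://example.org/news")  # typed directly: not on the paper's trail
    refs = s.visit("https://example.org/paper1/refs", via=paper)
    s.annotate(refs, "Reference list cites the original dataset")
    print(s.chronology())
    print(s.trail(refs))
```

Storing one parent index per visit makes each trail a simple walk back to the root; a page reached from several places just appears as several visits, which keeps the chronology intact.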

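TracSNAP’s file-coupling and contact-suggestion features rest on a simple idea: count what changes together. The sketch below is a hypothetical illustration of that idea, not TracSNAP’s actual code. It assumes the commit history and per-developer edit sets have already been extracted from the project’s repository; the functions `co_change_counts`, `related_files` and `contact_suggestions` are names made up for this example, and ranking by raw counts is our assumption.

```python
from collections import Counter
from itertools import combinations


def co_change_counts(commits):
    """Count how often each unordered pair of files is committed together.

    `commits` is an iterable of collections of file paths, one per commit.
    Pairs are stored in sorted order so (a, b) and (b, a) share one counter.
    """
    pairs = Counter()
    for files in commits:
        for a, b in combinations(sorted(set(files)), 2):
            pairs[(a, b)] += 1
    return pairs


def related_files(commits, my_file, top=3):
    """Files most often committed alongside `my_file`, highest count first."""
    related = Counter()
    for (a, b), n in co_change_counts(commits).items():
        if a == my_file:
            related[b] += n
        elif b == my_file:
            related[a] += n
    return related.most_common(top)


def contact_suggestions(edits, me, top=3):
    """Other developers ranked by number of files edited in common with `me`.

    `edits` maps each developer to the set of files they have touched.
    Developers with no overlap are left out; ties are broken alphabetically.
    """
    mine = edits[me]
    scores = {dev: len(mine & files) for dev, files in edits.items() if dev != me}
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [(dev, n) for dev, n in ranked if n > 0][:top]


if __name__ == "__main__":
    # Toy history: each set is the files touched by one commit.
    commits = [
        {"parser.py", "lexer.py"},
        {"parser.py", "lexer.py", "README"},
        {"parser.py", "ast.py"},
        {"ui.py"},
    ]
    print(related_files(commits, "parser.py"))

    # Toy per-developer edit sets.
    edits = {
        "ann": {"parser.py", "lexer.py"},
        "bob": {"parser.py", "lexer.py", "ast.py"},
        "cy": {"ui.py"},
    }
    print(contact_suggestions(edits, "ann"))
```

Raw counts favour files that change often; a real tool might normalise them (for example by each file’s total number of commits), but the project description doesn’t say which approach TracSNAP takes.
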
[Update: the session was a great success, and some of the audience have blogged about it already: e.g. Cameron Neylon]