There has been a strong thread at the AGU meeting this week on how to do a better job of communicating the science. Picking up on the workshop I attended on Sunday, and another workshop and townhall meeting that I missed, came two conference sessions, the first, on Tuesday, on Providing Climate Policy Makers With a Strong Scientific Base, and the second on Wednesday, on Education and Communication for Climate Literacy and Energy Awareness.

I’ll get to the speakers at these sessions in a moment. But first, I need to point out the irony. While the crazier sections of the blogosphere are convinced that scientists are involved in some massive conspiracy with policymakers hanging on their every word, the picture from within the scientific community is very different. Speaker after speaker expressed serious concerns that their work is being ignored, and that policymakers just don’t get it. Yet at the same time, they seemed to have very little clue about the nature of this communication problem, nor how to fix it. In fact, a number of speakers came across as charmingly naive, urging the audience to put a bit more effort into explanation and outreach. The notion that these completely guileless scientists could be conspiring to hide the truth is truly ludicrous.

So why so many speakers and sessions focussing on science communication? Why the sense of failure? Well, on the one hand there was the steady stream of news about a lack of progress in Copenhagen, building on a year of little progress in the US Congress. On the other hand is the widespread sense in the AGU community that the IPCC AR4 is out of date, with the new science of the last 4-5 years dramatically removing some of the remaining uncertainties (see Alley’s talk for one example), and, in nearly every case, confirming that the AR4 projections understate the magnitude of the expected warming and its impacts. Perhaps the best summary of this is the Copenhagen Diagnosis, which documents how…

…several important aspects of climate change are already occurring at the high end, or even beyond, the expectations of just a few years ago. […] global ice sheets are melting at an increased rate; Arctic sea ice is thinning and melting much faster than recently projected, and future sea-level rise is now expected to be much higher than previously forecast.

As an aside, the European EGU meeting in April lacked this strong sense of communication failure. Could this be a mark of the peculiarly American (oh, and Canadian) culture of anti-science? Of a lack of science literacy? Or perhaps a sense that the US and Canada are lagging far behind on developing science-based policy?

Anyway, on with the sessions. For the session on “Providing Climate Policy Makers With a Strong Scientific Base”, the first speaker was David Carlson, director of the International Polar Year (IPY) programme office. He argued that scientists are definitely not having the influence on policy that they should and the weakness of any likely agreement in Copenhagen will prove this. He described a number of examples of science communication in policymaking, analyzing whether the transmitters (scientists) and/or the receivers (policymakers) did a good job.

The first example was on ocean fertilization. The Solas study demonstrated that ocean fertilization will be ineffective. This message was received by the IMO convention on marine pollution, which issued a policy statement noting with concern the potential for negative impacts from ocean fertilization. There was one small problem – the policy was widely interpreted to mean all ocean fertilization is bad, which then prevented small scale experiments as part of IPY. So, the transmitter was good, receiver even better, but this led to unintended consequences.

Another example was a US senate hearing that David appeared at earlier this year, for the Kerry-Boxer bill. The hearing focused on the arctic, and included three senators, several senior scientists, lobbyists, a consultant, and a representative from an NGO. (note: David wasn’t complimentary about the roles of the non-scientists: the lobbyists were there to promote appropriations, the consultant was there to promote his previous work, and the NGO rep claimed “the dog ate my homework”). As the chair didn’t hear a clear message from the participants, the hearing was held open, and they were invited to submit a joint report within 48 hours. David circulated a draft summary to the others within 8 hours, but got no response. In the end, it appears each participant submitted their own text. The Kerry-Boxer bill was introduced in the senate a few weeks later, containing no language at all about the impacts on the arctic. His conclusion is that a failure to work together represented a substantial lost opportunity. And this theme (of acting as individuals, and failing to coordinate) cropped up again and again in the talks.

The next speaker was Ed Struzik, science journalist and author. Ed characterized himself as the entertainer for the session, and laid on a beautiful series of photographs to illustrate his point that climate change is already having a dramatic impact on the arctic, but it’s a very complicated story. For example, even just defining what constitutes the arctic is difficult (North of arctic circle? Area where warmest summer month averages 9ºC?). It’s a very varied geography (glaciers, tundra, forest, smoking hills, hot springs, river deltas, lakes, etc). It’s a sacred place “where god began” for native peoples. And lots of fresh clean water: big rivers. Arctic wildlife. And while many of the impacts of climate change are frighteningly negative, not all are: For example the barren ground grizzlies will do well – warming will mean they don’t need to hibernate as long. And more ominously, a warming arctic creates opportunities to exploit new fossil fuel reserves, so there are serious economic interests at stake.

James Mueller from the office of US senator Maria Cantwell gave an insider’s view of how the US senate works (and a little plug for Cantwell’s CLEAR Act). Unlike most speakers in the session, he argued that scientists have done a good job with science communication, and even claimed that behind closed doors, most senators would agree climate change is a big problem; the challenge is reaching agreement on what to do about it. He suggested scientists need a more realistic view of the policy process – it takes years to get major policy changes through (cf universal healthcare), and he takes it as a good sign that the House vote passed. He also argued that less than twenty years from the first IPCC report, the science is well-developed and accepted by the majority of non-scientists. In contrast, energy economists have much wider disagreements, and are envious of the consensus among climate scientists.

James then described some difficulties: the drive towards specialization in the science community has led to isolation among climate scientists and policy experts. There’s a lack of math and science literacy among staff of the politicians. And, most importantly, the use of experts with “privileged information” runs counter to democracy, in that it’s difficult to maintain accountability in technocratic institutions, but difficult to get away from the need for specialist expertise.

However, I couldn’t help feeling that he missed the point, and was just giving us political spin to excuse inaction. If the vast majority of senators truly understood the science, there would have been no problem getting a strong bill through the senate this year. The problem is that they have a vague sense there is a problem, but no deep understanding of what the science really tells us.

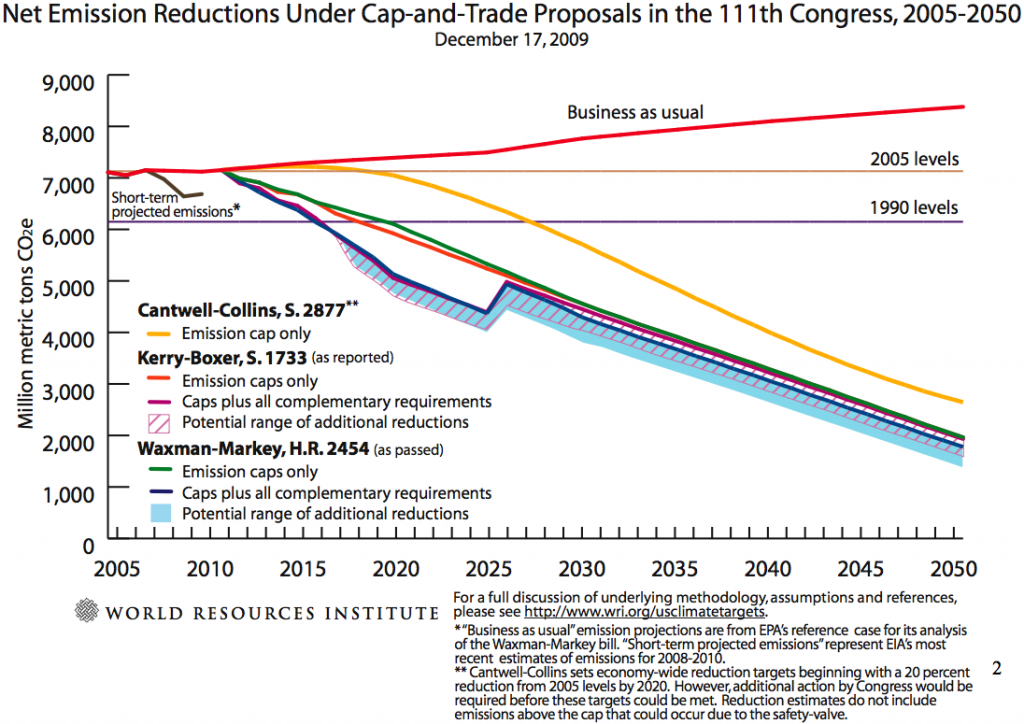

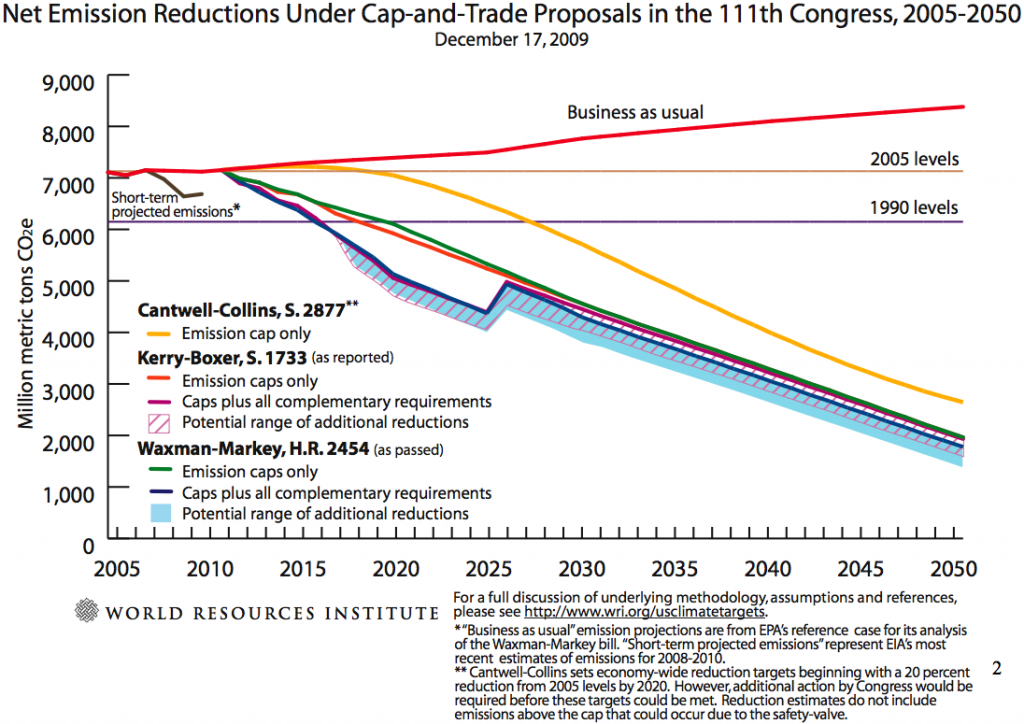

Amanda Staudt from the National Wildlife Federation dissected the way in which IPCC recommendations got translated into the details of the Waxman-Markey bill and the Kerry-Boxer bill (drawing on the analysis of the World Resources Institute):

WRI analysis of the various bills of the 111th Congress

The process looks like this:

(1) Politicians converged on 2°C temperature rise as an upper limit considered “safe”. The IPCC had a huge influence on this, but (as I noted above) recent advances in the science were not included.

(2) Then the policy community needed to figure out what level of greenhouse gas emissions would achieve this. They looked at table 5.1 in the IPCC Synthesis report:

…and concentrated on the first row. The fact that they focussed on the first row is a remarkable achievement of the science, but note that these were never intended to be interpreted as policy options; the table was just a summary of six different scenarios that had been considered in the literature. What was really needed (and wasn’t available when the IPCC report was put together) is a more detailed analysis of even more aggressive emissions scenarios; for example, the study that Meinshausen did earlier this year that gave a 50:50 chance of staying within 2°C for 450ppm stabilization.

(3) Then they have to determine country-by-country emissions reductions, which is what the “Bali box” summarizes.

(4) Then, what will the US do? Much of this depends on the US Climate Action Partnership (USCAP) lobbying group. The USCAP blueprint recommended an 80% reduction by 2050 (but compromised on a 1.3% reduction by 2020 – i.e. a slower start to the reductions).

The key point is that there was little role for the scientists in the later steps. Congress gets exposed to the science through a number of limited mechanisms: Hearings; 2 page summaries; briefings sponsored by interested parties (The CEI runs a lot of these!); lobby visits (there were a record number of these this year, with energy companies vastly outspending green groups); fact sheets; and local news stories in politicians’ own districts.

Amanda’s key point was that scientists need to be more involved throughout the process, and they can do this by talking more to their congresspersons, working with NGOs who are already doing the communications, and getting to work on analyzing the emissions targets, ready for the next stage of refinements to them. (Which is all well and good, but I don’t believe for one minute that volunteerism by individual scientists will make the slightest bit of difference here).

Chris Elfring of the National Academies’ Board on Atmospheric Sciences and Climate (BASC) spoke next, and gave a summary of the role of the National Academy of Sciences, including a history of the Academies, and the use of the NRC as its operational arm. The most interesting part of the talk was the report America’s Climate Choices, which is being put together now, with four panel reports due in early 2010, and a final report due in summer 2010.

All of the above speakers gave interesting insights into how science gets translated into policy. But none really convinced me that they had constructive suggestions for improving things. In fact the highlight of the session for me was a talk that didn’t seem to fit at all! Jesse Anttila-Hughes, a PhD student at Columbia, presented a study he’s doing on whether people act on what they believe about climate change. To assess this, he studied how specific media stories affect energy company stock prices.

He looked at three specific event types: announcements of a record temperature in the previous year according to NASA’s GISS data (6 such events in the last 15 years); announcements of sea-ice minima in the Arctic (3 such events); and announcements of ice shelf collapses in the Antarctic (6 such events). He then studied how these events affect the stock prices of S&P500 energy companies, which together have about a $1.2 trillion market capitalization, with 6.5 million trades per day. I.e. pretty valuable and busy stocks. The methodology, a version of CAPM, is a bit complicated, because you have to pick a suitable window (e.g. 10 days) to measure the effect, and allow a few days before the story hits the media, to allow for early leaks. And you have to measure how the company is doing relative to the markets in general each day.
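To make the method a little more concrete, here is a minimal sketch of a generic event study in Python. It is not the study's actual code: it assumes a simple market-model regression (stock return = alpha + beta × market return) fitted on the days before the event window, and the function name, the 120-day estimation period, and the 3-day lead-in are all illustrative assumptions. The data at the bottom is synthetic.

```python
import numpy as np


def cumulative_abnormal_return(stock_returns, market_returns, event_index,
                               estimation_days=120, pre_days=3, post_days=10):
    """Cumulative abnormal return for one stock around one event.

    All parameter names and defaults are hypothetical choices for illustration.
    Returns are daily fractional returns (0.01 means +1%).
    """
    stock = np.asarray(stock_returns, dtype=float)
    market = np.asarray(market_returns, dtype=float)

    # Event window: a few days before the story (early leaks) to a few days after.
    win_start = event_index - pre_days
    win_end = event_index + post_days + 1  # exclusive upper bound
    est_start = win_start - estimation_days
    if est_start < 0 or win_end > len(stock):
        raise ValueError("not enough data around the event")

    # Fit stock = alpha + beta * market on the period before the event window.
    beta, alpha = np.polyfit(market[est_start:win_start],
                             stock[est_start:win_start], 1)

    # Abnormal return = actual return minus what the market alone would predict.
    expected = alpha + beta * market[win_start:win_end]
    abnormal = stock[win_start:win_end] - expected
    return float(abnormal.sum())


# Synthetic example: a stock that tracks the market, plus a 3% drop on the event day.
rng = np.random.default_rng(0)
market = rng.normal(0.0, 0.01, size=200)
stock = 0.0005 + 1.2 * market
stock[150] -= 0.03
print(cumulative_abnormal_return(stock, market, event_index=150))
```

Averaging this number over all the companies and all the events of one type gives the kind of headline figure reported in the talk. The window lengths matter: too short and you miss leaks or slow reactions, too long and unrelated news creeps in.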

Anyway, the results are fascinating. Each announcement of a record hot year causes energy stocks across the board to drop by 3% (which, given the size of their market capitalization, is a huge loss of value: 3% of $1.2 trillion is roughly $36 billion). However, when ice shelves collapse you get the opposite response – a 2.5% rise in energy stocks. And there is no significant reaction to reports about sea ice minima (despite a number of scientists regarding this as a crucial early warning signal).

The interpretation seems pretty straightforward. Record temperatures are the purest signal of climate change, and hence cause investors to pay attention. Clearly, investors are taking the risk seriously, anticipating significant regulation of fossil-fuel energy sources, and have been doing so for a long time (at least back into the mid-90s). On the other hand, collapsing ice shelves are seen as an opportunity, in that they might open up more areas for oil and gas exploration, and it seems that investors expect this to outweigh (or precede) any climate change legislation. And sea ice minima, they probably don’t understand, or end up as a mixed signal with exploration opportunities and regulation threats balancing out.

More detailed followup studies of other such news stories would be fascinating. And that’s got me really thinking about how to effect behaviour changes…